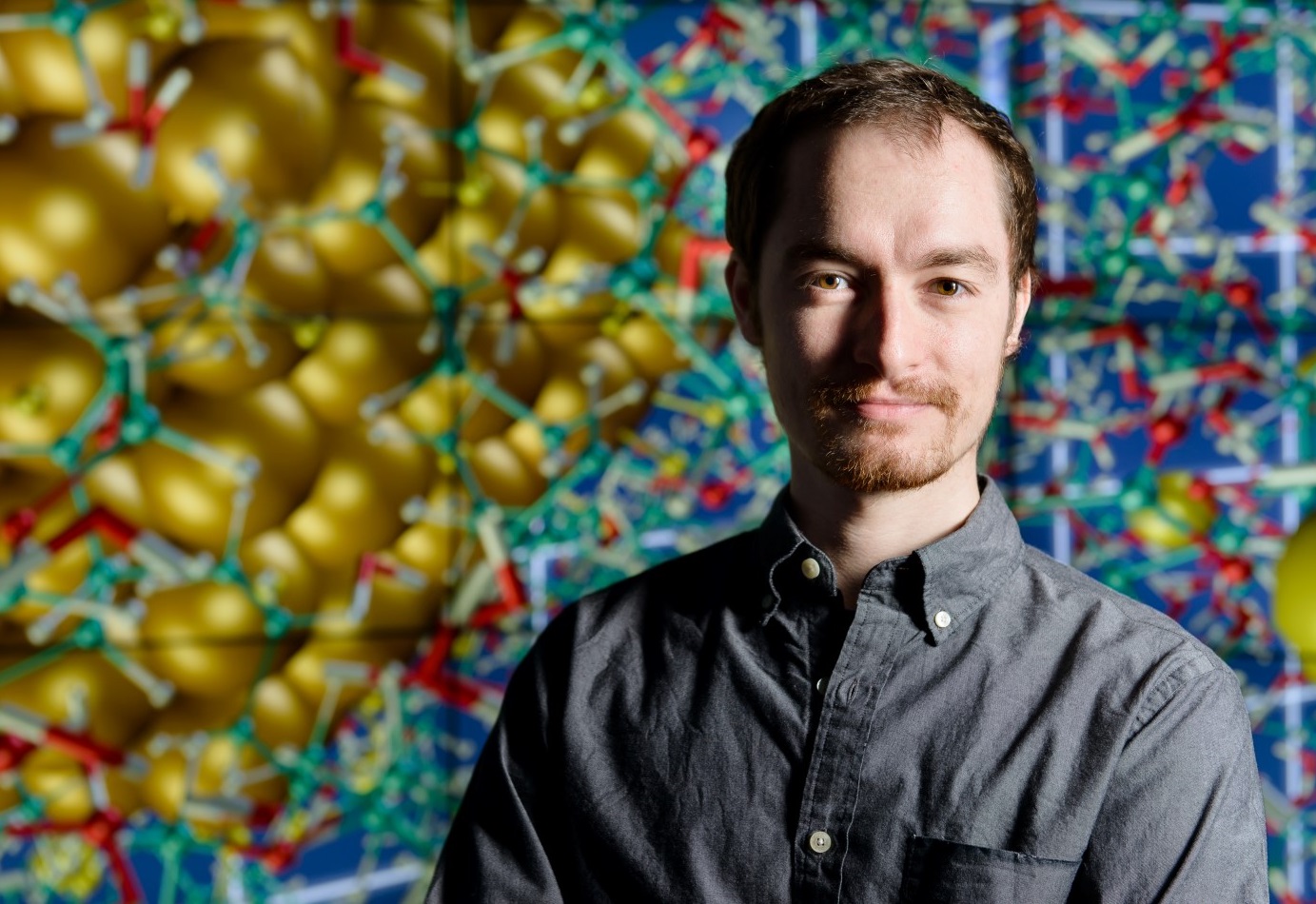

Welcome! I'm Alessandro Febretti. I'm a Research Assistant and Ph.D. Student at the Electronic Visualization Lab of the University of Illinois at Chicago.

This website is a repository of my current and past projects, together with my publications, class projects, presentations and stuff I do for fun.

I'm into Virtual Reality and Immersive Systems, Human-Computer Interaction, Scientific and Information Visualization, Tiled Display Systems, GPU Programming and Videogame Design.

Current Projects

CAVE2 (October 2012 - Present)

Porthole (January 2013 - Present)

Porthole is a framework that helps Virtual Environment (VE) application developers generate decoupled HTML5 interfaces. The goal is to ease interaction between VE systems and smartphones, tablets, laptops or desktop computers, without the need for ad-hoc client applications. For instance, CAVE2 developers can now use handheld devices to interact with and manage their VE applications just by providing an XML Interfaces Description (XID) file (and, optionally, a Cascading Style Sheets (CSS) file to change the client interface style). Clients connect to CAVE2 using an HTML5-enabled browser, receive a tailored Graphical User Interface, and manage VE application cameras and parameters. Connection, messaging and streaming management is therefore transparent to VE application developers.

Porthole addresses the limitations of earlier work by proposing a novel Human-Computer Interaction (HCI) model that exploits browsers as a means of interaction with VE systems. State-of-the-art work, by contrast, presents application- or Operating System (OS)-specific solutions. Moreover, Porthole suggests a new concept of remote collaboration with respect to the Collaborative Virtual Environments (CVEs) model proposed in earlier work in this area.

HANDS (January 2012 - Present)

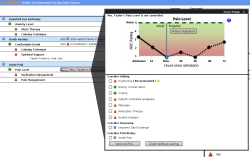

The Hands-on Automated Nursing Data System (HANDS) is a standardized plan-of-care method in which the patient's plan is updated at every nurse hand-off, allowing the interdisciplinary team to track the story of care and progress toward desired outcomes in a standardized format across time and units.

EVL is collaborating with UIC's College of Nursing and College of Engineering on the visual component of the HANDS system. Current goals include:

- Improving the original HANDS user interface for more potential users, such as doctors and therapists.

- Investigating and understanding the needs of prospective users, and adding functionality to HANDS to address those needs.

- Adding visualization to the existing HANDS reports, in an effort to empower current and future users to do more data exploration and visual analytics.

Omegalib and the Omicron SDK

Omegalib is a runtime environment and application toolkit for tiled displays and immersive and hybrid reality systems. Its main features are: support for cluster rendering; display system scalability; seamless integration with a wide range of input peripherals; and support for third-party high-level toolkits, such as VTK and OpenSceneGraph. Omegalib applications can be developed in C++ or Python, and can run on heterogeneous systems, from a laptop to the OmegaDesk to CAVE2.

The omicron SDK is a library providing access to a variety of input devices, mostly used in immersive installations and stereo display systems. Some of the devices currently supported by omicron are:

- All motion capture systems supporting the VRPN protocol

- NaturalPoint trackers (TrackIR, OptiTrack)

- Game controllers (Wii, Xbox 360, PS3)

- Microsoft Kinect (multiple Kinects are supported; omicron also offers functions for multi-Kinect transform calibration)

- SAGE pointer connections

- PQLabs multitouch overlays

- ThinkGear brainwave interfaces
