Introduction

Use the information on this page to install and verify your HDF Kita product from Amazon Web Services (AWS) Marketplace.

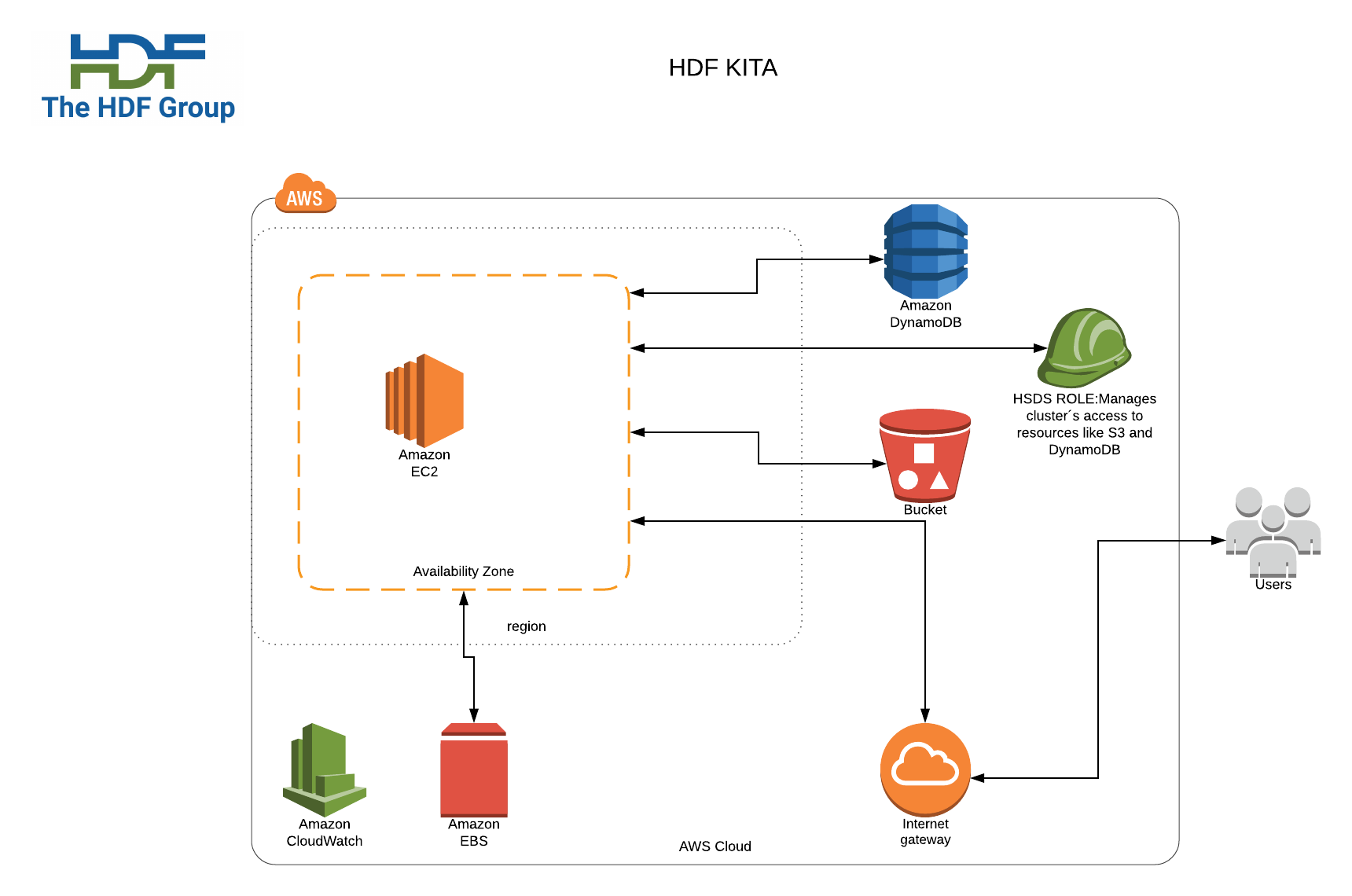

HSDS Stack Architecture

The next image shows a high level view of the most important resources created by the installation:

Installation

Note: Before following these steps, make sure you have already subscribed to our product in AWS Marketplace.

Choose CloudFormation template

This release provides two templates, which cover the following scenarios:

- Kita Server Default: Deploys an HSDS stack and performs all configuration automatically: network configuration, resource creation, and provisioning.

- Kita Server DNS: A variant that lets the end user provide a fully qualified domain name (FQDN) to be used as the domain name for accessing the HDF Kita instance. Requires the user to have a domain in AWS Route 53.

Create an AWS CloudFormation Stack

Now you are ready to install HDF Kita in an AWS CloudFormation Stack. You will do this from the AWS Management Console, using CloudFormation.

Follow these steps:

- From the Kita Server AWS Marketplace listing, select Continue to Subscribe

- On the Subscribe page, review the Kita Server pricing and the End User License Agreement, then click Continue to Configuration

- On the Configuration page, select either Kita Server (Default) or Kita Server (DNS), then click Continue to Launch

- In the Launch page, click Launch

- On the CloudFormation Create Stack page, click Next to run the Kita Server template.

- On the Specify Details page, enter a name for the stack that contains no spaces or special characters

- On the same page, under Parameters, select the desired instance type (HDF Kita will take advantage of the multiple cores available with the larger instance types)

- Also under Parameters, select an EC2 key pair for accessing the machine using SSH.

- For the Kita Server DNS template, in the Amazon Domain Configuration, enter the DomainName and Route 53 HostedZoneId

- In HSDS Configuration, enter the usernames that will have access to the service (include admin in the list for administrative access)

- Also in HSDS Configuration, if using the DNS template, select true or false for EnableHTTPS

- In SSH Enable Connection, enter the CIDR specification for the IP addresses that will be allowed to SSH into the instance

- In Logs Filtering, enter the desired log level

- Click Next

- On the next page, under Tags, enter key:value pairs for any launched instances you wish to keep track of

- On the same page, select the desired IAM role; if left blank, the account permissions will be used

- On the same page, select a value for Rollback Triggers if desired

- On the same page, change any of the Advanced settings if desired

- Click Next

- Acknowledge that the template may create resources and click Create

Testing the Installation

CloudFormation will now create all the resources that make up your HDF Kita instance:

- An EC2 instance with service software

- An S3 bucket that will be used to store HDF data

- A DynamoDB table for usernames and passwords

- An EBS volume that persists catalogue information across instance restarts

When the CloudFormation page displays CREATE_COMPLETE under Status, HDF Kita is ready to use. The “Outputs” table lists all the AWS resources that have been created. The names are unique, so multiple HDF Kita instances can be created under the same account if desired.
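If you prefer to script this step, the sketch below uses boto3 (the AWS SDK for Python; an assumption, since this page only uses the console) to wait for CREATE_COMPLETE and read the Outputs into a dictionary keyed by output name. The stack name is whatever you entered on the Specify Details page.

```python
def outputs_to_dict(stack: dict) -> dict:
    """Flatten a describe_stacks() stack entry's Outputs list into {OutputKey: OutputValue}."""
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}


def get_stack_outputs(stack_name: str) -> dict:
    """Wait for the stack to finish creating, then return its Outputs (needs AWS credentials)."""
    import boto3  # imported lazily so outputs_to_dict works without boto3 installed

    cf = boto3.client("cloudformation")
    # Blocks until CREATE_COMPLETE; raises a WaiterError if creation fails or rolls back.
    cf.get_waiter("stack_create_complete").wait(StackName=stack_name)
    stack = cf.describe_stacks(StackName=stack_name)["Stacks"][0]
    return outputs_to_dict(stack)
```

For example, `get_stack_outputs("my-kita-stack")["HSDSInstanceDNS"]` would return the instance's public DNS name, where "my-kita-stack" is a hypothetical stack name.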

The HSDSInstanceDNS output gives the public DNS name of the instance. To test connectivity to the service, enter the following URL in a browser: http://<DNS_Name>/about. If HTTPS has been enabled, use https instead: https://<DNS_Name>/about. The browser should display JSON text with the fields “about”, “name”, “state”, “hsds_version”, “start_time”, and “greeting”. If the “state” field is “READY”, the service is operational.
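The same check can be automated with the Python standard library. This is a minimal sketch: it builds the /about URL, fetches the JSON, and treats the service as operational only when “state” is “READY”. The timeout value is an arbitrary choice for the sketch.

```python
import json
import urllib.request


def about_url(dns_name: str, use_https: bool = False) -> str:
    """Build the /about URL for an HDF Kita instance."""
    scheme = "https" if use_https else "http"
    return f"{scheme}://{dns_name}/about"


def is_ready(about: dict) -> bool:
    """The service is operational when the "state" field is "READY"."""
    return about.get("state") == "READY"


def check_service(dns_name: str, use_https: bool = False, timeout: float = 10.0) -> bool:
    """Fetch /about and report whether the service is READY (requires network access)."""
    with urllib.request.urlopen(about_url(dns_name, use_https), timeout=timeout) as resp:
        about = json.load(resp)
    return is_ready(about)
```

Call `check_service("<DNS_Name>")` with the HSDSInstanceDNS value, adding `use_https=True` if HTTPS was enabled.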

Deleting an Installation

In CloudFormation, select the stack, and pick the Delete action. This will delete all AWS resources created by the installation.

Note: You will need to empty the generated S3 bucket before deleting the stack; otherwise, the stack removal will fail.
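Emptying the bucket and deleting the stack can also be scripted. The sketch below assumes boto3 with configured credentials. It deletes every object and, in case versioning is enabled on the bucket, every object version, then deletes the stack and waits for completion. The stack and bucket names are placeholders; take the bucket name from the stack's Outputs tab. This permanently deletes all HDF data stored in the bucket.

```python
def empty_bucket(bucket) -> None:
    """Delete every object, and every object version if versioning was ever enabled."""
    bucket.objects.all().delete()
    bucket.object_versions.all().delete()


def delete_kita_stack(stack_name: str, bucket_name: str) -> None:
    """Empty the stack's S3 bucket, then delete the stack (needs AWS credentials)."""
    import boto3  # imported lazily; requires AWS credentials

    empty_bucket(boto3.resource("s3").Bucket(bucket_name))
    cf = boto3.client("cloudformation")
    cf.delete_stack(StackName=stack_name)
    # Block until CloudFormation reports the stack as deleted.
    cf.get_waiter("stack_delete_complete").wait(StackName=stack_name)
```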

Managing User Accounts

User account information is stored in a DynamoDB table created by the CloudFormation stack. The table name is displayed in the CloudFormation Outputs tab, in the HSDSTableName row.

Go to the DynamoDB service in the Management Console, select the Tables item on the left, and then select the table name that was shown in CloudFormation.

The Items tab will show the users and passwords created during installation.
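The same listing can be retrieved with boto3 (assumed installed and configured). A single scan() call returns at most one page of results (up to 1 MB), so this sketch follows LastEvaluatedKey until the table is exhausted. The items include passwords, so treat the output as sensitive.

```python
def scan_all(table) -> list:
    """Return all items from a DynamoDB Table resource, following scan pagination."""
    items = []
    kwargs = {}
    while True:
        page = table.scan(**kwargs)
        items.extend(page.get("Items", []))
        if "LastEvaluatedKey" not in page:
            return items
        # Resume the next scan where the previous page stopped.
        kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]


def list_users(table_name: str) -> list:
    """List the user items in the table named in the HSDSTableName output."""
    import boto3  # imported lazily; requires AWS credentials

    return scan_all(boto3.resource("dynamodb").Table(table_name))
```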

Note: Whenever the DynamoDB table is modified, the EC2 instance will need to be restarted for the change to take effect.
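The restart can be scripted as well. This sketch (boto3 assumed) finds the stack's instance through describe_stack_resources, filtering on the AWS::EC2::Instance resource type, and reboots it. A reboot is used because, unlike a stop and start without an Elastic IP, it keeps the instance's public IP address and DNS name, so the HSDSInstanceDNS value stays valid. It assumes the HSDS service starts automatically at boot; reboot_instances returns once the request is accepted, so recheck /about before using the service.

```python
def instance_ids(stack_resources: list) -> list:
    """Pick EC2 instance IDs out of a describe_stack_resources() StackResources list."""
    return [
        r["PhysicalResourceId"]
        for r in stack_resources
        if r.get("ResourceType") == "AWS::EC2::Instance"
    ]


def restart_kita_instance(stack_name: str) -> list:
    """Reboot every EC2 instance created by the stack and return their IDs."""
    import boto3  # imported lazily; requires AWS credentials

    cf = boto3.client("cloudformation")
    resources = cf.describe_stack_resources(StackName=stack_name)["StackResources"]
    ids = instance_ids(resources)
    if ids:
        boto3.client("ec2").reboot_instances(InstanceIds=ids)
    return ids
```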

Note: For security, only trusted IAM users should have access to this table.

Changing Passwords

Select the desired username in the DynamoDB table, then use the Edit action to modify the password field.

Adding User Accounts

Select Create Item in the DynamoDB table and add the desired username and password.

Deleting User Accounts

Select the desired name in the DynamoDB table and select the Delete action.

Note: This action will not affect any user data that is stored in the S3 bucket.
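The three console actions above map onto two DynamoDB calls. In this sketch (boto3 assumed), put_item both adds a user and changes a password, because it creates the item or replaces it wholesale, and delete_item removes a user. USER_ATTR and PASSWORD_ATTR are hypothetical attribute names: check the Items tab for the names your table actually uses. If the items carry more attributes than these two, prefer the console's Edit action, since put_item would drop the others. As with console edits, restart the EC2 instance afterwards.

```python
USER_ATTR = "username"      # hypothetical: replace with the table's key attribute name
PASSWORD_ATTR = "password"  # hypothetical: replace with the table's password attribute name


def user_item(username: str, password: str) -> dict:
    """Build the item written for one user."""
    return {USER_ATTR: username, PASSWORD_ATTR: password}


def set_password(table, username: str, password: str) -> None:
    """Create the user if missing, or overwrite the whole item (and its password) if present."""
    table.put_item(Item=user_item(username, password))


def delete_user(table, username: str) -> None:
    """Remove the user's item; data the user stored in S3 is not touched."""
    table.delete_item(Key={USER_ATTR: username})
```

A table handle comes from `boto3.resource("dynamodb").Table("<HSDSTableName value>")`.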

Command Line Tools

The command line tools are client applications that can be run from any machine that can reach the service. They let users view content, upload HDF5 files, download files, and perform other simple tasks.

The command line tools can be used from Linux, OS X, and Windows operating systems.

Installation

On the client system, install pip if not already installed: https://pip.pypa.io/en/stable/installing/.

To install the Python SDK (which includes the CLI tools), run the following from the command line: $ pip install h5pyd

Once it is installed, run $ hsconfigure at the command prompt. This command will prompt you to enter the endpoint, username, and password. The API key is not used with HDF Kita and can be ignored. For the endpoint, enter http://<DNS_Name>, where <DNS_Name> is the DNS name displayed in the CloudFormation output.

If successful you should see:

```
Testing connection...
connection ok
Save changes? (Y/N)
```

Enter ‘Y’ and hsconfigure will save your connection information in a file named .hscfg in your home directory.
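Scripts can read the same file to confirm which endpoint and user the CLI tools will use. This sketch assumes the file consists of key = value lines (for example hs_endpoint = http://<DNS_Name>) plus # comments; the exact key names are an assumption, so check your own .hscfg.

```python
from pathlib import Path


def parse_hscfg(text: str) -> dict:
    """Parse 'key = value' lines, skipping blank lines and '#' comments."""
    cfg = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, value = line.split("=", 1)
        cfg[key.strip()] = value.strip()
    return cfg


def load_hscfg(path=None) -> dict:
    """Read ~/.hscfg (or the given path) and parse it."""
    path = Path(path) if path is not None else Path.home() / ".hscfg"
    return parse_hscfg(path.read_text())
```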

The following sections describe the different CLI tools. For any tool, run <tool name> --help to get detailed usage information.

hsinfo

This tool returns basic information on the server state and validates the username and password saved in the .hscfg file.

hsls

This tool lists the folders and domains at the given path.

Rather than directories and files, HDF Kita uses the terms folders and domains. A folder holds a collection of folders and domains. A domain is the equivalent of a regular HDF5 file stored in a POSIX filesystem.

During installation, a home folder is created for each user at the path /home/<username>. For example, if peterpan is a username, running $ hsls /home/peterpan/ will display:

```
peterpan folder 2018-06-25 17:01:24 /home/peterpan/
1 items
```

As additional content is created in this folder, those items will be displayed by hsls.

hsload

This tool uploads an HDF5 file from the client environment to HDF Kita.

Usage: $ hsload <filename> <folder> uploads a file to the given folder, where it is saved under the same name as the local file; $ hsload <filename> <domain> stores it as the given domain.

Examples:

```shell
hsload myhdf5file.h5 /home/bob/                # creates /home/bob/myhdf5file.h5
hsload myhdf5file.h5 /home/bob/mycloudfile.h5  # creates /home/bob/mycloudfile.h5
```
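The two examples follow a simple rule, sketched here as a hypothetical helper resolve_domain: a destination ending in / is a folder and the local file name is appended; anything else is the full domain path. The rule is inferred from these examples only, so the real tool may treat other cases (such as a folder path without a trailing slash) differently. The hypothetical upload wrapper runs hsload with the -v flag so the load is reported.

```python
import os
import subprocess


def resolve_domain(local_file: str, destination: str) -> str:
    """Return the domain hsload is expected to create for this destination."""
    if not destination.startswith("/"):
        # There is no server-side working directory, so only absolute paths make sense.
        raise ValueError("server paths must be absolute")
    if destination.endswith("/"):
        return destination + os.path.basename(local_file)
    return destination


def upload(local_file: str, destination: str) -> str:
    """Run hsload -v and return the domain path it should have created."""
    domain = resolve_domain(local_file, destination)
    subprocess.run(["hsload", "-v", local_file, destination], check=True)
    return domain
```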

Note: There’s no equivalent to the concept of “current working directory” for paths on the server. Always use absolute path names.

Note: By default hsload doesn’t produce any output. Use the -v flag or use the hsls tool to verify the load.

Note: The requesting user must have permission to write to the folder that will be used to store the domain (see hsacl below).

Note: Non-HDF5 files cannot be loaded. Use h5ls to verify that a file to be uploaded is indeed an HDF5 file.

Note: If you need a test file, you can download this one: https://s3.amazonaws.com/hdfgroup/data/hdf5test/tall.h5.
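When h5ls is not at hand, a script can at least check the HDF5 format signature. The eight-byte signature \x89HDF\r\n\x1a\n starts the superblock, which the HDF5 file format specification places at offset 0 or, when a user block is present, at 512, 1024, 2048, and so on. This is only a quick filter that does not validate the rest of the file, and max_offset is an arbitrary cap chosen for the sketch.

```python
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def looks_like_hdf5(path: str, max_offset: int = 1 << 20) -> bool:
    """Return True if the HDF5 signature appears at offset 0, 512, 1024, 2048, ..."""
    with open(path, "rb") as f:
        offset = 0
        while offset <= max_offset:
            f.seek(offset)
            chunk = f.read(len(HDF5_SIGNATURE))
            if chunk == HDF5_SIGNATURE:
                return True
            if len(chunk) < len(HDF5_SIGNATURE):
                break  # reached end of file
            offset = 512 if offset == 0 else offset * 2
    return False
```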

hsget

This tool retrieves a domain from the server and stores it as an ordinary HDF5 file on the client system.

Usage: $ hsget <domain> <filename>

Examples:

```shell
hsget /home/bob/myhdf5file.h5 myhdf5file.h5  # stores myhdf5file.h5 in the current directory
```

hstouch

This tool creates a new folder or a domain (which will contain just the root group) on the server.

Usage: $ hstouch <folder> to create a folder

Usage: $ hstouch <domain> to create a domain

Example:

```shell
hstouch /home/bob/myfolder
```

hsrm

This tool removes a folder or domain from the server.

Usage: $ hsrm <folder> to delete a folder

Usage: $ hsrm <domain> to delete a domain

Example:

```shell
hsrm /home/bob/myhdf5file.h5
```

hsacl

This tool can be used to display or modify ACLs (Access Control Lists) for folders or domains. The ACL for a given user controls which actions (“create”, “read”, “update”, “delete”, “read ACL”, “update ACL”) that user can perform, either via the CLI tools or programmatically.
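To make these semantics concrete, here is a hypothetical in-memory model, not the HDF Kita implementation or the hsacl output format: each user has one flag per action, a user without an entry gets no permissions, and the admin user is allowed everything, matching this page's note about the admin user.

```python
from dataclasses import dataclass

ACTIONS = ("create", "read", "update", "delete", "read_acl", "update_acl")


@dataclass(frozen=True)
class Acl:
    """One user's permissions on a folder or domain (hypothetical model)."""
    create: bool = False
    read: bool = False
    update: bool = False
    delete: bool = False
    read_acl: bool = False
    update_acl: bool = False


def is_allowed(username: str, action: str, acls: dict) -> bool:
    """Check whether username may perform action, given a {username: Acl} mapping."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    if username == "admin":  # admin may act regardless of the ACL
        return True
    acl = acls.get(username, Acl())  # no entry means no permissions
    return getattr(acl, action)
```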

Run: hsacl --help for details on usage.

Also, this blog post: https://www.hdfgroup.org/2015/12/serve-protect-web-security-hdf5/ describes the design and use of ACLs as well as other web security related issues.

Note: The admin user has the ability to perform any action regardless of the existing ACL. To prevent accidental erasure of data

Python SDK

The Python package h5pyd can be used to create Python programs that read or write data to HDF Kita.

Usage is largely compatible with the popular h5py package for HDF5 files. See: http://docs.h5py.org/en/stable/ for documentation of h5py.

Example:

```python
import h5pyd

f = h5pyd.File("/home/bob/anewfile.h5", "w")  # create a domain on the server
f.create_group("mysubgroup")  # create a group under the root group
f.close()  # close the domain
```

C SDK

A plugin to the HDF5 library, the HDF5 REST VOL, supports reading and writing data with C, C++, and Fortran applications. See https://bitbucket.hdfgroup.org/users/jhenderson/repos/rest-vol/browse for information on downloading, building, and using the REST VOL.

JavaScript

Currently there is no JavaScript package to facilitate using HDF Kita from within web applications. The REST API is documented here: http://h5serv.readthedocs.io/.
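Without a client package, the requests are plain HTTP. The following sketch, in Python to match the rest of this page, builds an authenticated GET request with the standard library. It assumes the service accepts HTTP Basic authentication with the HDF Kita username and password and selects the domain through a domain query parameter; confirm both against the REST API documentation. A web application would send the same URL and Authorization header, for example with fetch().

```python
import base64
import urllib.parse
import urllib.request


def build_request(endpoint: str, path: str, domain: str,
                  username: str, password: str) -> urllib.request.Request:
    """Build an authenticated GET request for a REST API path on the given domain."""
    query = urllib.parse.urlencode({"domain": domain})  # assumed query parameter name
    url = f"{endpoint.rstrip('/')}{path}?{query}"
    token = base64.b64encode(f"{username}:{password}".encode()).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
```

Sending it is then `json.load(urllib.request.urlopen(build_request("http://<DNS_Name>", "/", "/home/bob/tall.h5", "bob", "<password>")))`, with the endpoint and credentials from your own installation.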

The following blog post describes developing a web-based application using the HDF REST API: https://www.hdfgroup.org/2017/04/the-gfed-analysis-tool-an-hdf-server-implementation/.