Formal Languages - Udacity

Functions and Computable Functions - (Udacity,Youtube )

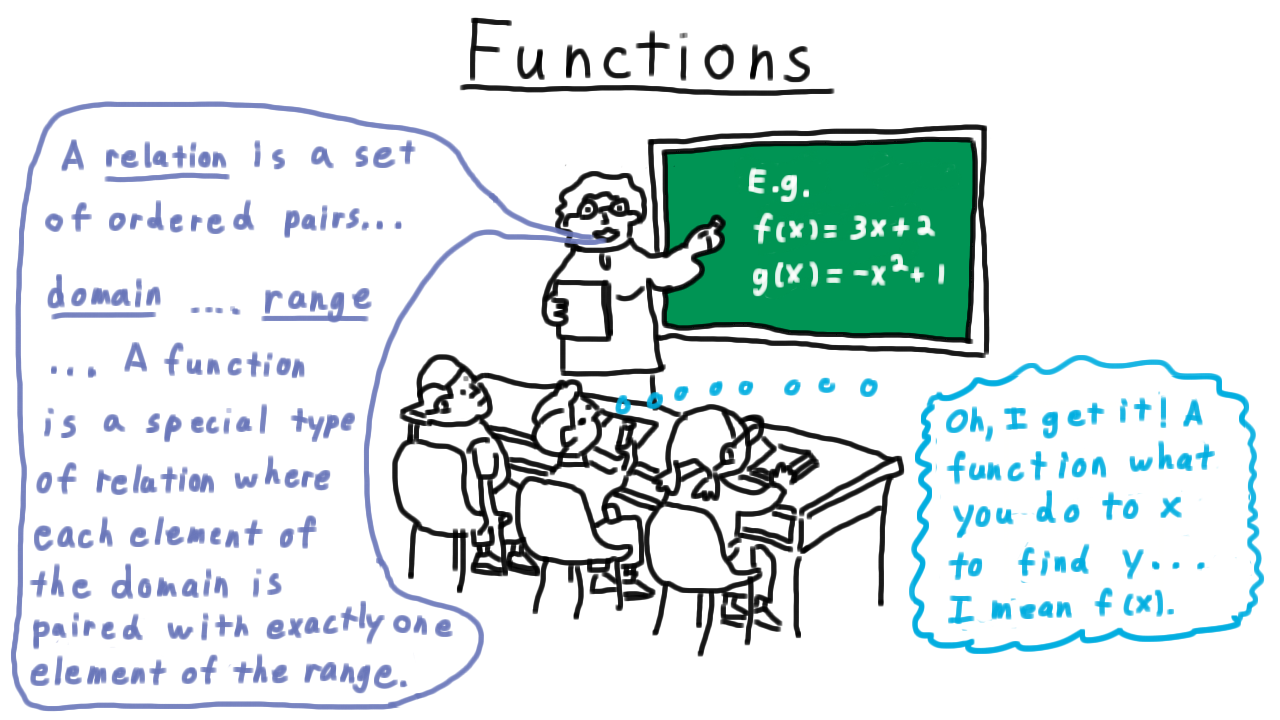

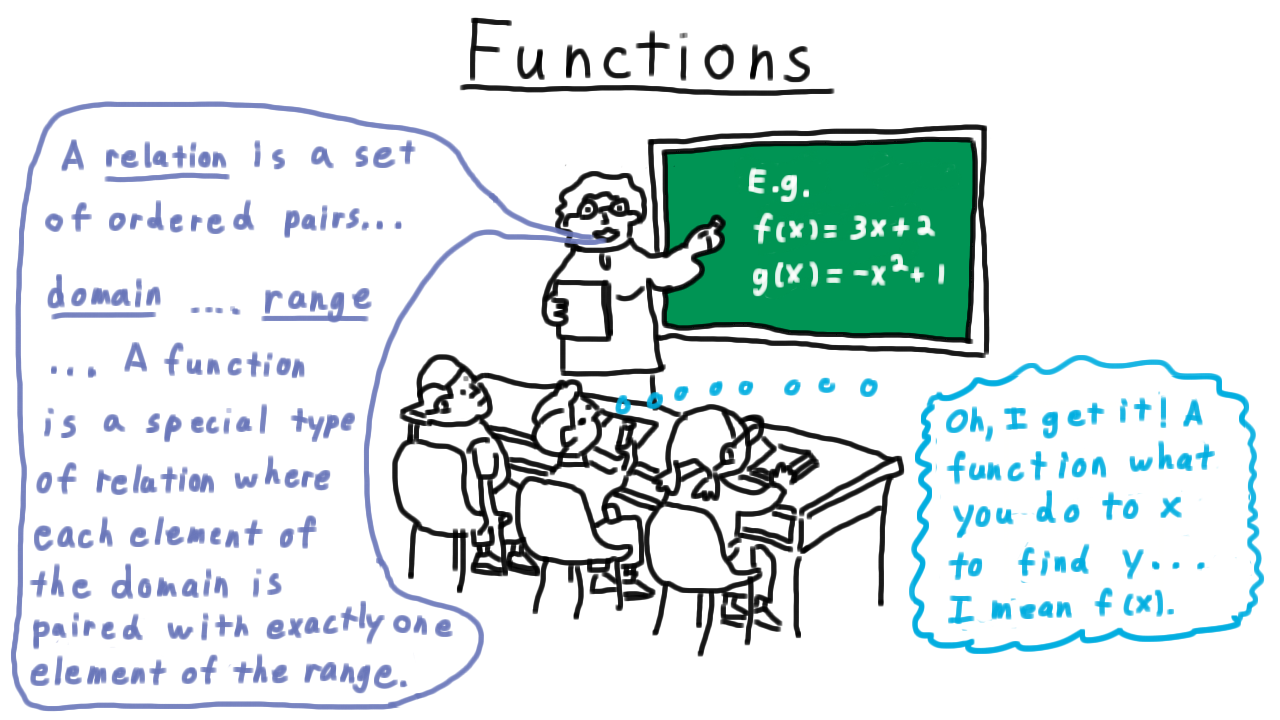

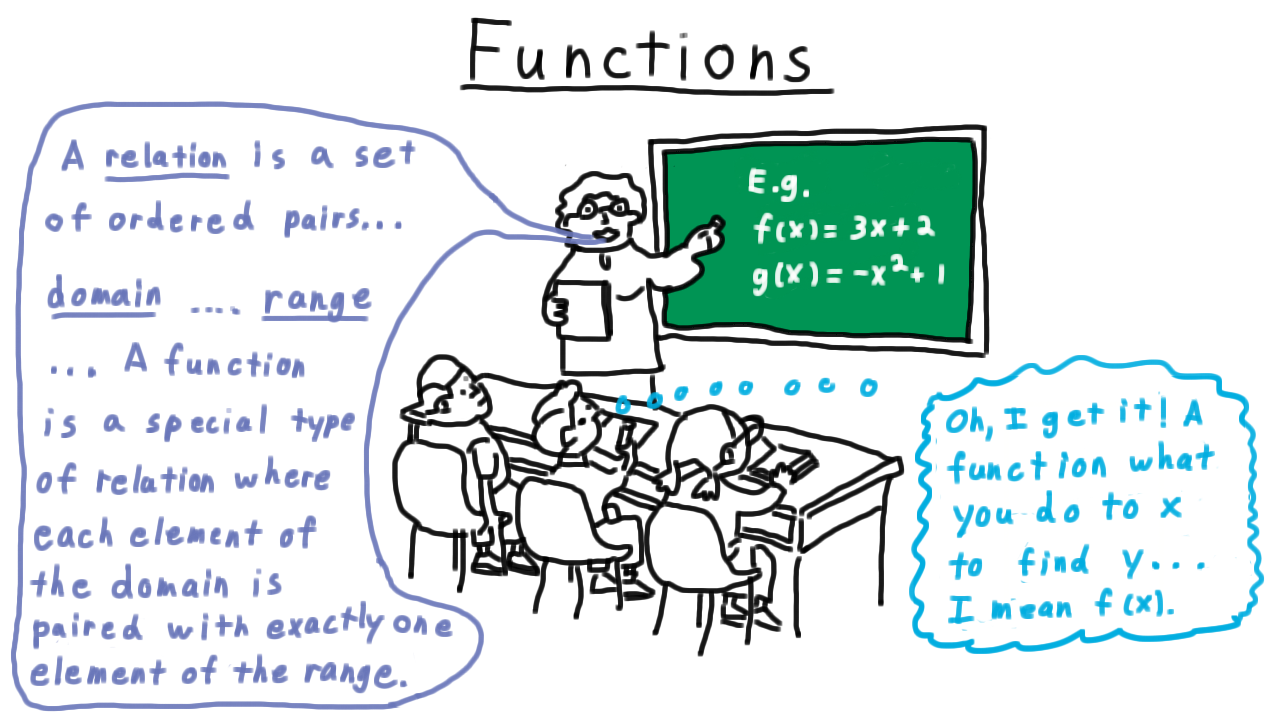

In school, it is common to develop a certain mental conception of a function. This conception is often of the form:

A function is a procedure you do to an input (often called x) in order to find an output (often called f(x)).

However, this notion of a function is much different from the mathematical definition of a function:

A function is a set of ordered pairs such that the first element of each pair is from a set X (called the domain), the second element of each pair is from a set Y (called the codomain or range), and each element of the domain is paired with exactly one element of the range.

The first conception—the one many of us develop in school—is that of a function as an algorithm: a finite number of steps to produce an output from an input, while the second conception—the mathematical definition—is described in the abstract language of set theory. For many practical purposes, the two definitions coincide. However, they are quite different: not every function can be described as an algorithm, since not every function can be computed in a finite number of steps on every input. In other words, there are functions that computers cannot compute.

Rules of the Game - (Udacity, Youtube)

When you hear the word computation, the image that should come to mind is a machine taking some input, performing a sequences of operations, and after some time (hopefully) giving some output.

In this lesson, we will focus on the forms of the inputs and output and leave the definition of the machine for later.

The inputs read by the machine must be in the form of strings of characters from some finite set, called the machine’s alphabet. For example, the machine’s alphabet might be binary (0s and 1s), it might be based on the genetic code (with symbols A, C, G, T), or it might be the ASCII character set.

Any finite sequence of symbols is called a string. Thus, ‘0110’ is a string over the binary alphabet. Strings are the only input data type that we allow in our model.

Sometimes, we will talk about machines having string outputs just like the inputs, but more often than not, the output will just be binary–an up or down decision about some property of the input. You might imagine the machine just turning on one of two lights, one for accept, or one for reject, once the machine is finished computing.

With these rules, an important type becomes a collection of strings. Maybe, it’s the set of strings that some particular machine accepts, or maybe we are trying to design a machine so that it accepts strings in a certain set and no others, or maybe we’re asking if it’s even possible to design a machine that accepts everything in some particular set and no others. In all these cases, it’s a set of strings that we are talking about, so it makes sense to give this type its own name.

We call a set of strings a language. For example, a language could be a list of names, It could be the set of binary strings that represent even numbers–notice that this set is infinite–or it could be the empty set. Any set of strings over an alphabet is a language.

Operations on Languages - (Udacity, Youtube)

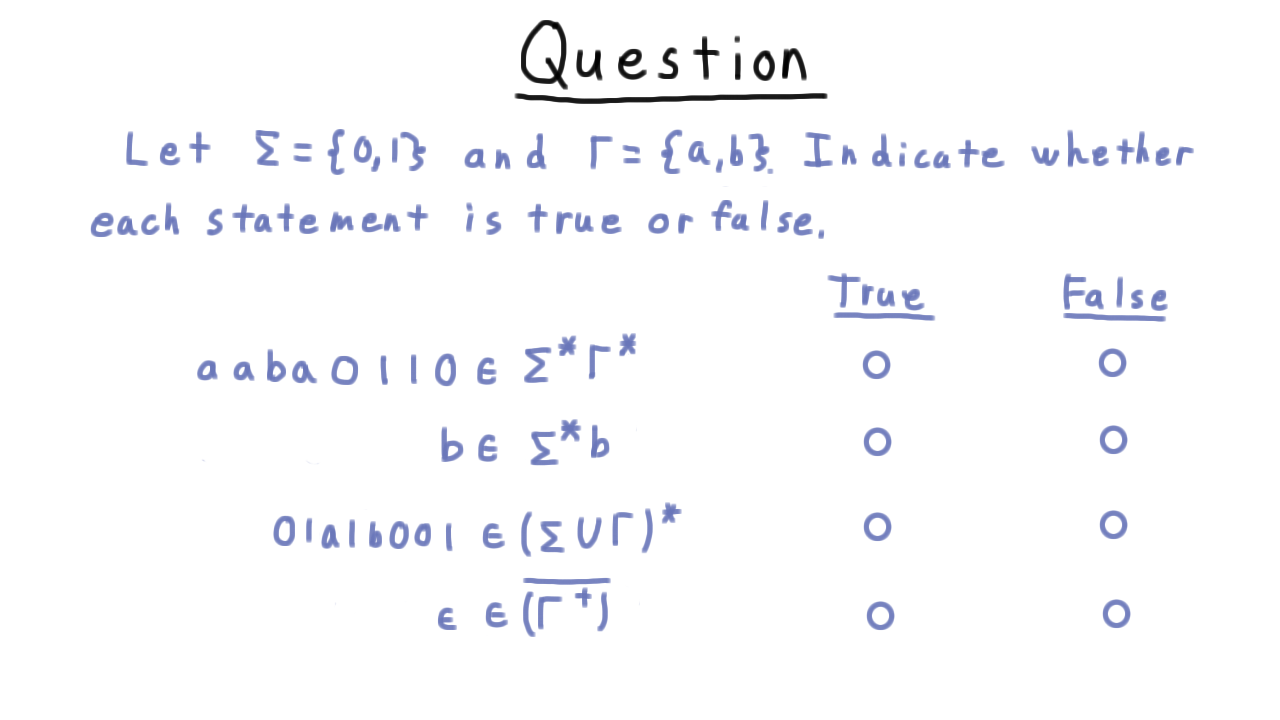

The concept of a language is fundamental to this course, so we’ll take a moment to describe common operations used to manipulate languages. For examples we’ll use the languages \(A = \){’0‘,10’} and \(B = \) {’0’,‘11’} over the zero-one alphabet.

Since languages are sets, we have the usual operations of union, intersection and complement defined for them. For example \(A\) union \(B\) consists of the three strings ‘0’,‘10’, and ‘11’. The string ‘0’ comes from both \(A\) and \(B\), ‘10’ from \(A\) and ‘11’ from \(B\). The intersection contains only those strings in both languages, just the string ‘0’.

To define the complement of a language we need to make clear what it is that we are completing. It’s not sufficient just to say that it is everything not in \(A\). For \(A\) that would include strings with characters besides 0 and 1 or maybe even infinite sequences of 0’s and 1’s, which we don’t want. The complement, therefore, is defined so as to complete the set of all strings over the relevant alphabet, in this case the binary alphabet. The alphabet over which the language is defined is almost always clear from context. In this case, the complement of \(A\) will be infinite.

In addition to these standard set operations, we also define an operation for concatenating two languages. The concatenation of \(A\) and \(B\) is just all strings you can form by taking a string from \(A\) and appending a string from \(B\) to it. In our examples, this set would be ‘00’ with first 0 coming from \(A\) and second from \(B\). The string ‘011’ with the ‘0’ coming from \(A\) and the ‘11’ coming from \(B\), and so forth. Of course, we can also concatentate a language with itself. Instead of writing \(AA\), we often write \(A^2\). In general, when we want to concatenate a language with itself k times we write \(A\) to the kth power. Note that for \(k=0\), this defined as the language containing exactly the empty string.

When we want to concatenate any number of strings from a language together to form a new language, we use an operator known as Kleene star. This can be thought of as the union of all possible powers of the language. When we want to exclude the emptry string, we use the plus operator, which insists that at least one string from A be used. Notice the difference in starting indices. So for example, the string ‘01001010’ is in \( A^*\). There is a way that I can break it up so that each part is in the language \(A\), and so as a whole the string can be thought of a concatenation of strings from \(A\). Note that even \(A^*\) doesn’t include infinite sequences of symbols. Each individual string from A must be of finite length, and you are only allowed to concatenate a finite number together.

For those who have studied regular expressions, this should seem quite familiar. In fact, one gets the notation of regular expressions by treating individual symbols a languages. For example, \(0^*\) is the set of all strings consisting entirely of zeros. Here we are treating the symbol 0 as a language unto itself. We will also commonly refer to \(\Sigma^*\), meaning all possible strings over the alphabet \(\Sigma\). Here we are treating the individual symbols of the alphabet as strings in the language \(\Sigma\).

Language Operations Exercise - (Udacity)

Countability (Part 1) - (Udacity, Youtube)

We need one more piece of mathematical background before we can more formally prove our claim that not all functions are computable. Intuitively, the proof will show that there are more languages than there are possible machines. The set of possible computer programs is countably infinite, but the set of languages over an alphabet in uncountably infinite.

If you aren’t familiar with the distinction between countable and uncountable sets already, you may be thinking to yourself “inifinity is a strange enough idea by itself; now I have to deal with two of them!” Well, it isn’t as bad as all that. A countably infinite set is one that you can enumerate–that is, you can say this is the first element, this is the second element, and so forth, and –this is the really important part– eventually give a number to every element of the set. For some sets an enumeration straightforward. Take the even numbers. We can say that 2 is the first one, 4 the second, 6 the third and so forth. For some sets, like the rationals you have to be a little clever to find an enumeration. We’ll see the trick for enumerating them in a little bit. And for some sets, like the real numbers, it doesn’t matter how clever you are; there simply is no enumeration. These are the uncountable sets.

Let us make the following definition:

A set S is countable if it is finite or if it is in one-to-one correspondence with the natural numbers.

A one-to-one correspondence, by the way is a function that is one-to-one, meaning that no two elements of the domain get mapped to the same element of the range, and also onto, meaning the every element of the range is mapped to by an element of the domain. For example, here is a one-to-one correspondence between the integers 1 through 6 and the set of permutations of three elements.

Now, in general, the existence of a one-to-one correspondence implies that the two sets have the same size– that is, the same number of elements. And this actually holds even for infinite sets. This is why we say that there are as many even natural numbers as there are natural numbers: because it’s easy to establish a one-to-one correspondense between the two sets, f(n) = 2n for example. Some examples of countably infinite sets are:

- The set of nonnegative even numbers (a one-to-one correspondence to the natural numbers is \(f(x) = x/2)\)

- The set of positive odd numbers (with correspondence \(f(x) = (x-1)/2\)

- The set of all integers (with correspondence \(f(x) = 2x\) if \(x\) is nonnegative and \(f(x) = -2x - 1\) if \(x\) is negative)

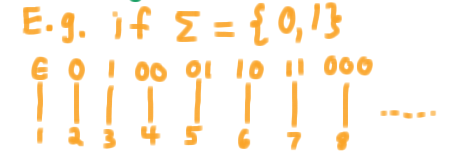

For our purposes, we want to show that the set of all strings over a finite alphabet is countable. Since computer programs are always represented as finite strings, this will tell us that the set of computer programs is countable. The proof is relatively straightforward. Recall that \(\Sigma*\) is the union of strings of size 0 with those of size 1 with those of size 2, etc. Our strategy will just be to assign the first number to \(\Sigma^0\), the next numbers \(\Sigma^1\), the next to \(\Sigma^2\), etc. Here is the enumeration for the binary alphabet.

The key is that every string gets a positive integer mapped to it under this scheme. Therefore,

The set of all strings over any alphabet is countable.

Countability (Part 2) - (Udacity, Youtube)

This same argument shows that

A countable union of finite sets is countable.

Suppose that our set of sets is \(S_0, S_1,…\) etc. Without loss of generality, we’ll suppose that they are disjoint. (If they happen not to be disjoint, we can always make them so by subtracting out from \(S_k\) all the elements it shares with sets \(S_0...S_k-1\).)

Then the argument proceeds just as before. We assign the first numbers to \(S_0\), the next to \(S_1\), etc. Every element in the union must have a first set \(S_k\) that it belongs to, and thus it will be counted in the enumeration.

It turns out that we can actually prove something even stronger than this statement here.

We can replace this word finite with the word countable, and say that

A countable union of countable sets is countable.

Notice that our current proof doesn’t work. If we tried to count all of the elements of \(S_0\) before any of the elements of \(S_1\), we might never get to the elements of \(S_1\), or any other set besides \(S_0\). Nevertheless, this theorem is true. For convenience of notation, we let the elements of \(S_k\) be \(\{x\_{k0}, x\_{k1},…\}\) and then we can make each set \(S_k\) a row in a grid.

Again, we can’t enumerate row-by-row here because we would never finish the first row. On the other hand, can go diagonal-by-diagonal, since each diagonal is finite. The union of all the set S_k is the union of all the rows, but that is the same as the union of all the diagonals. Each diagonal being finite, we can then apply the original version of the theorem to prove that a countable union of countable sets is countable.

Note that his idea proves that the rationals are countable. Imagine putting all fractions with a 1 in the numerator in the first row, all those with a 2 in the numerator in the second row, etc.

A False Proof - (Udacity)

Languages are Uncountable - (Udacity, Youtube)

So far, we’ve seen that the set of strings over an alphabet in countable. But what about all subsets of these strings? What about the set of all languages. It turns out that this set is uncountable.

The set of all languages over an alphabet is uncountable.

For the proof, we’ll suppose not. That is, suppose there is an enumeration of the languages \(L_1, L_2, \ldots \) over an alphabet \(\Sigma\). Also, let \(x_1, x_2,\ldots \) be the strings in \(\Sigma^*\). We are then going to build a table, where the columns correspond to the strings from Sigma* and the rows correspond to the languages. In each entry in the table, we’ll put a 1 if the string is in the language and a 0 if it is not.

Now, we are going to consider a very sneaky language defined as follows: it consists of the those strings \(x_i\) for which \(x_i\) is not in the language \(L_i\). In effect, we’ve taken the diagonal in this table and just reversed it. Since we are assuming that the set of languages is countable, this language must be \(L_k\) for some \(k\). But is \(x_k\) in \(L_k\) or not? From the table, the row \(L_k such\) that in every column the entry is the opposite of what is on the diagonal. But the diagonal entry can’t be the opposite of itself.

If \(x_k\) is in \(L_k\), then according to the definition of \(L_k\), it should not be in \(L_k\). On the other hand, if \(x_k\) is not in \(L_k\), then it should be in \(L_k\). Another way to think about this argument is to say that this oppositie-of-the-diagonal language must be different from every row in the table because it is different from the diagonal element. In any case, we have a contradiction and can conclude that this enumeration of the languages was invalid since the rest of our reasoning was sound. This argument here is known as the diagonalization trick, and we’ll see it come up again later, when we discuss Undecidability.

Consequences - (Udacity, Youtube)

Although they may not be immediately apparent, the consequences of the uncountability of languages are rather profound.

We’ll let \(\Sigma\) be the set of ASCII characters–these are all the ones you would need to program–

and observe that the set of strings that represent valid python programs is a subset of \(\Sigma^*\). Each program is a finite string, after all. (The choice of python arbitrarily– any language or set of languages works.) Since this set is subset of the countable set \(\Sigma^*\), we have it is countable. Thus, there are a countable number of python programs.

On the other hand, consider this fact. For any language \(L\), we can define \(F_L\) to be the function that is 1 if x is in \(L\) and 0 otherwise. All such functions are distinct, so the set of these functions must be uncountable, just like the set of all languages.

Here is the profound point, since the set of valid python programs is countable but the set of functions is not, it follows that there must be some functions that we just can’t write programs for. In fact, there are uncountably many of them!

So going back to our picture of the HS classroom, we can see that the teacher, perhaps without realizing it was talking about something much more general that what the student ended up thinking. There are only countably many computer programs that can follow a finite number of steps as the student was thinking, but there are uncountably many functions that fit the teachers definition.

Computability and Algorithms - Course Notes

Turing Machines - (Udacity)

Motivation - (Udacity, Youtube)

In the last lesson we talked informally about what it means to be computable. But what even is a computer? What kinds of problem can we solve on a computer? What problem can’t be solved?

To answer these questions we need a model of computation. There are many, many ways we could define the notion of computability–ideally something simple and easy to describe, yet powerful enough so this model can capture everything any computer can do, now or in the future.

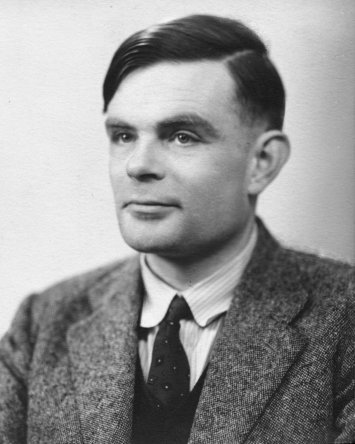

Luckily one of the very first mathematical model of a computer serves us quite well, a model developed by Alan Turing in the 1930’s. Decades before we had digital computers, Turing developed a simple model to capture the thinking process of a mathematician. This model, which we now call the Turing machine, is an extremely simple device and yet completely captures our notion of computability.

In this lesson we’ll define the Turing machine, what it means for the machine to compute and either accept or reject a given input. In future lessons we’ll use this model to give specific problems that we cannot solve.

Introduction - (Udacity, Youtube)

In the last lesson, we began to define what computation is with the goal of eventually being precise about what it can and cannot do. We said that the input to any computation can be expressed as a string, and we assumed that, whatever instructions there were for turning the input into output, that these too could be expressed as a string. Using a counting argument, we were able to show that there were some functions that were not computable.

In this lesson, we are going to look at how input gets turned into output more closely. Specifically, we are going to study the Turing machine, the classical model for computation. As we’ll see in a later lesson, Turing machines can do everything that we consider as computation, and because of their simplicity, they are a terrific tool for studying computation and its limitations. Massively parallel machines, quantum computers they can’t do anything that a Turing machine can’t also do.

Turing machines were never intended to be practical, but nevertheless several have been built for illustrative purposes, including this one from Mike Davey.

The input to the machine is a tape onto which the string input has been written. Using a read/write head the machine turns input into output through a series of steps. At each step, a decision is made about whether and what to write to the tape and whether to move it right or left. This decision is based on exactly two things:

- the current symbol under the read-write head, and

- something called the machines state, which also gets updated as the symbol is written.

That’s it. The machine stops when it reaches one of two halting states named accept and reject. Usually, we are interested in which of these two states the machine halts in, though when we want to compute functions from strings to strings then we pay attention to the tape contents instead.

It’s a very interesting historical note that in Alan Turing’s 1936 paper in which he first proposed this model, the inspiration does not seem to come from any thought of a electromechanical devise but rather from the experience of doing computations on paper. In section 9, he starts from the idea of a person who he call the computer working with pen and paper, and then argues that his proposed machine can do what this person does.

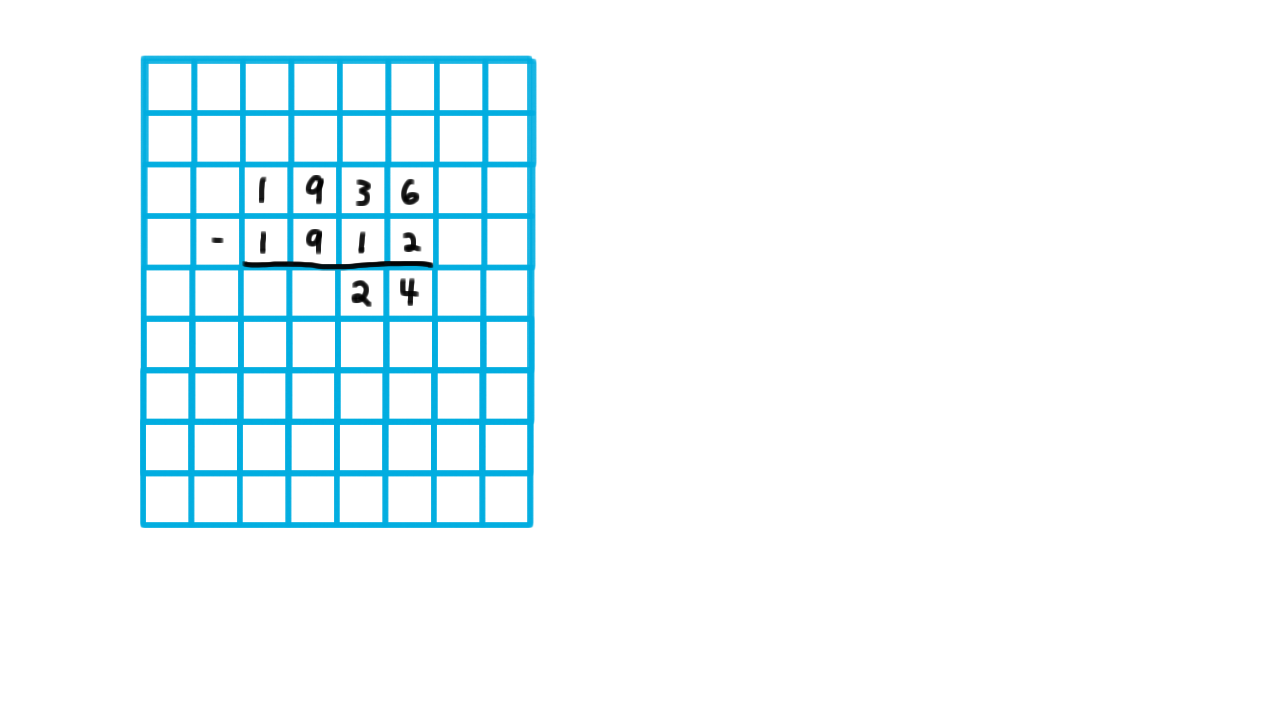

Let’s follow his logic by considering my computing a very simple number: Alan Turing’s age when he wrote the paper.

\[1936 - 1912 = 24.\]

Turing argues that any calculuation like this can be done on a grid.

Like a child arithmetic book, he says. By this he means something like wide-ruled graph paper. He argues that all symbols can be made to fit inside of one of these squares.

Then he argues, that the fact that the grid is two-dimensional is just a convenience, so he takes away the paper and says that computation can done on tape consisting of a one-dimensional sequence of squares. This isn’t convenient for me, but it doesn’t limit what the computation I can do.

Then points out that there are limits to the width of human perception. Imagine I am reading in a very long mathematical paper, where the phrase “hence by theorem this big number we have …” is used. When I look back, I probably wouldn’t be sure at a glance that I had found the theorem number. I would have to check, maybe four digits at a time, crossing off the ones that I had matched so as to not lose my place. Eventually, I will have matched them all and can re-read the theorem.

Since Turing was going for the simplest machine possible, he takes this idea to the extreme and only let’s me read one symbol be read at a time, and limits movement to only one square at time, trusting to the strategy of making marks on the tape to record my place and my state of mind to accomplish the same things as I would under normal operation with pen and paper. And with those rules, I have become a Turing machine.

So that’s the inspiration, not a futuristic vision of the digital age, but probably Alan Turing’s own everyday experience of computing with pen and paper.

Notation - (Udacity, Youtube)

Now that we have some intuition for the Turing machine, we turn to the task of establishing some notation for our mathematical model. Here, I’ve used a diagram to represent the Turing machine and its configuration.

We have the tape, the read/write head, which is connected to the state transition logic and a little display that will indicate the halt state–that is, the internal state of the Turing machine when it stops.

Mathematically, a Turing machine consists of:

A finite set of states \(Q\). (Everything used to specify a Turing machine is finite. That is important.)

An input alphabet of allowed input symbols. (This must NOT include the blank symbol which we will notate with this square cup most of the time. For some of the quizzes where we need you to be able to type the character we will use ‘b’. We can’t allow the input alphabet to include the blank symbol or we wouldn’t be able to tell where the input string ended.)

A tape alphabet of symbols that the read/write head can use (this WILL include the blank symbol)

It also includes a transition function from a (state,tape symbol) to a (state, tape symbol, direction) triple. This, of course, tells the machine what to do. For every, possible current state and symbol that could be read, we have the appropriate response: the new state to move to, the symbol to write to the tape (make this same an the read symbol to leave it alone), and the direction to move the head relative to the tape. Note that we can always move the head to the right, but if the head is currently over the first position on the tape, then we can’t actually move left. When the transition function says that the machine should be left, we have it stay in the same position by convention.

We also have a start state. The machine always starts in the first position on the tape and in this state.

Finally, we have an accept state,

and a reject state. When these are reached the machine halts its execution and displays the final state.

At first, all of this notation may seem overwhelming–it’s a seven-tuple after all. Remember, however, that all the machine ever does is to respond to the current symbol it sees based on its current state. Thus, it’s the transition function that is at the heart of the machine and most all of the important information like the set of states and the tape alphabet is implicit in it.

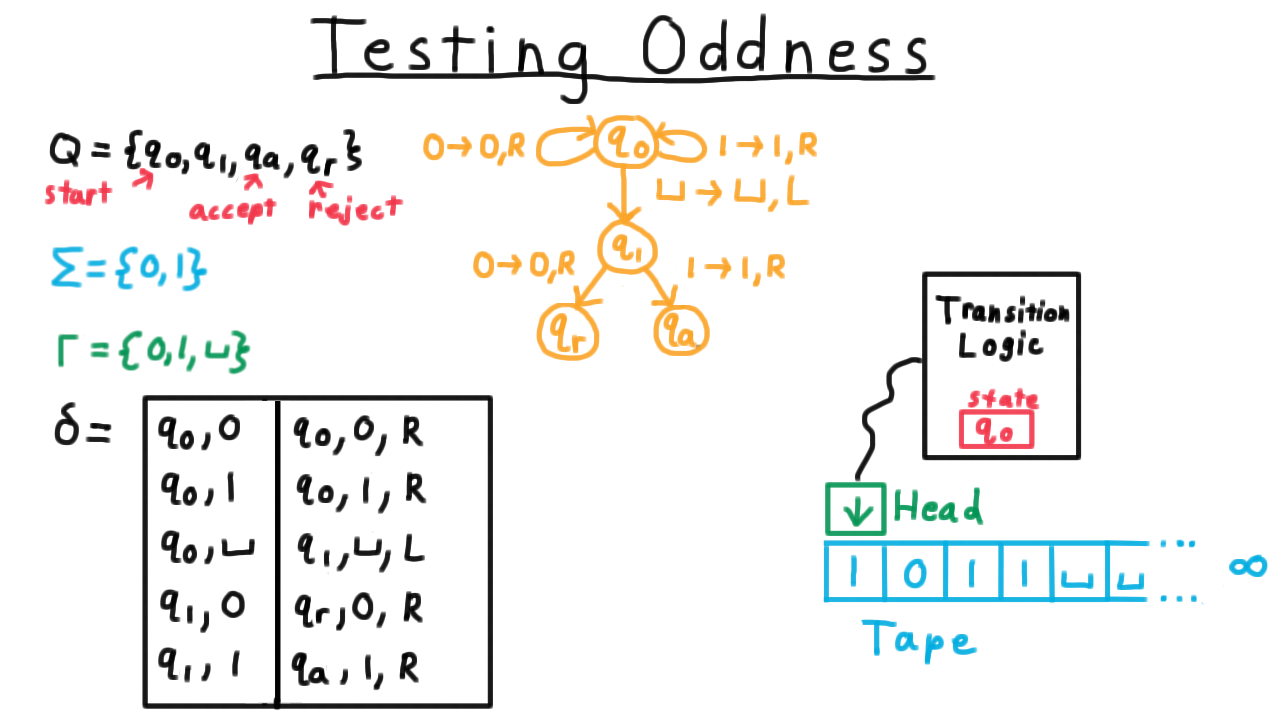

Testing Oddness - (Udacity, Youtube)

For our first Turing machine example, consider one that tests the oddness of a binary representation of a natural number. Note that I’ve cheated here in the transition function by including only (state,symbol) pairs in the domain that we would actually encounter during computation. By convention, if there is no transition is specified for the current (state,symbol) pair, then the program just halts in the reject state.

One convenient way represent the transition function, by the way, is with a state diagram, similar to what is often used for finite automata for those familiar with that model of computation. Each state gets its own vertex in a multigraph and every row of the transition table is represented as an edge. The edge gets labeled with remaining information besides the two states, that is the symbol that is read, the one that is written and the direction in which the head is moved.

See if you can trace throught the operation of the Turing machine for the input shown. If you are unsure, watch the video.

Configuration Sequences - (Udacity, Youtube)

Recall that a Turing machine always starts in the initial state and with the head at the first position on the tape. As it computes, it’s internal state, the tape contents, and the position of the head will change, but everything else will stay the same. We call this triple of state, tape content, and head position a configuration, and any given computation can be thought of as a sequence of configurations. It starts with the initial state, the input string and with the head position on the first location, and it proceeds from there.

Now, it isn’t very practical always draw a picture like this one every time that we want to refer to the configuration of a Turing machine, so we develop some notation that captures the idea.

We’ll do the same computation again, but this time we’ll write down the configuration using this notation. We write the start configuration as \(q_0\)1011. The part to the left of the state represents the contents of the tape to the left of the head. It’s just the empty string in this case. Then we have the state of the machine and then the rest of the tape contents.

After the first step, 1 is to the left of the head, we are state \(q_0\) still and ‘1’ is the string to the right. In the next, configuration ‘10’ is to the left, we are still in state \(q_0\) and ‘11’ to right,and so on and so forth.

This notation is a little awkward, but it’s convenient for typesettings. It’s also very much in the spirit of Turing machines, where all structured data must ultimately be represented as strings. If a Turing machine can handle working with data like this, then so can we.

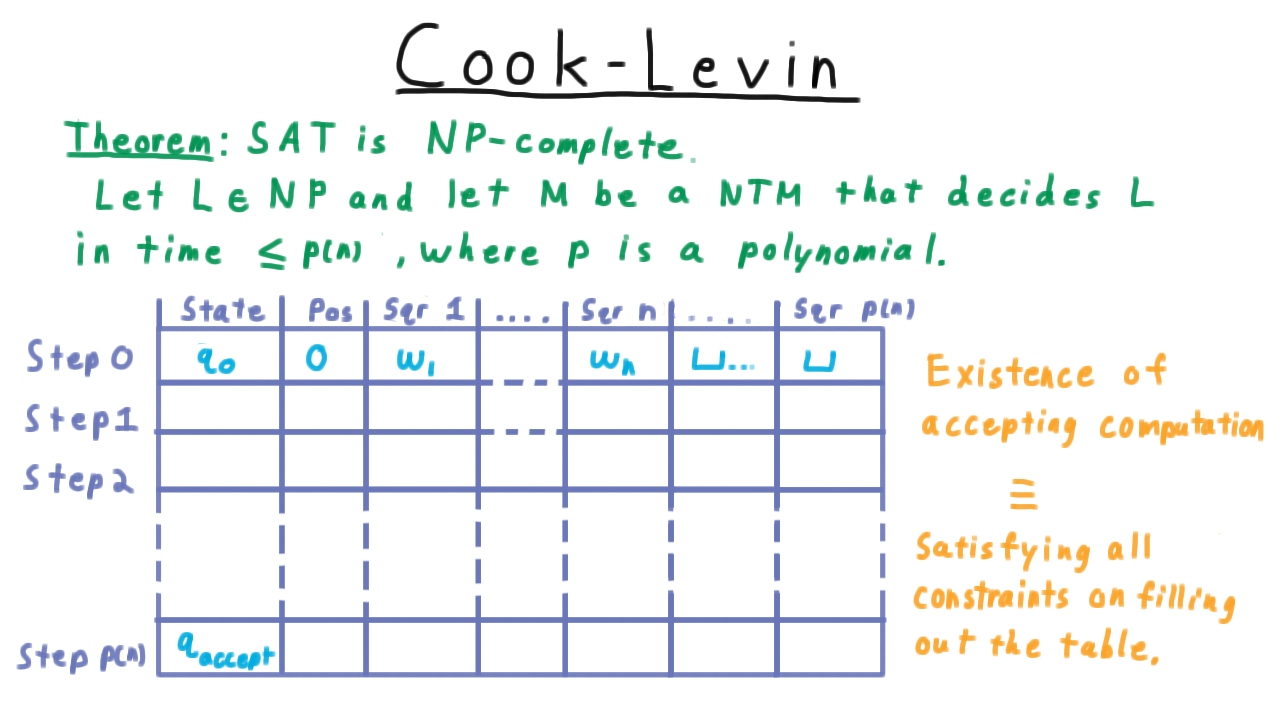

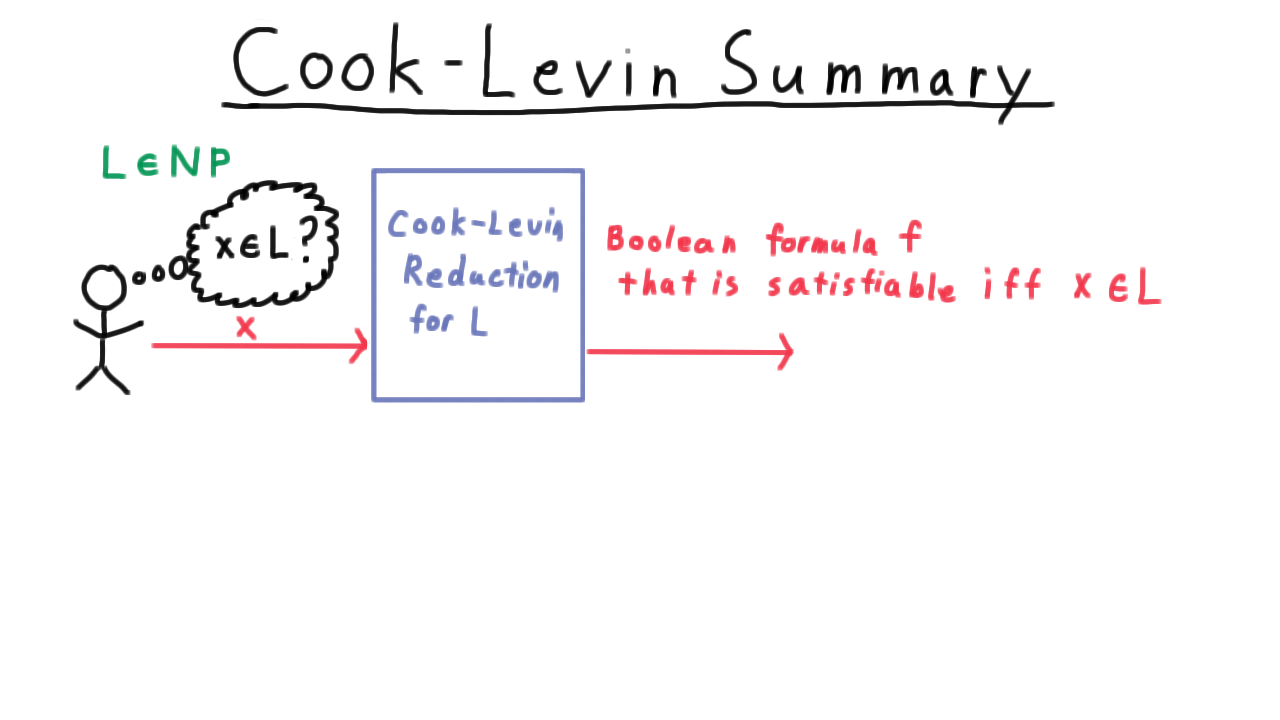

At a slightly higher level, a whole sequence of configurations like this captures everything that a Turing machine did on a particular input, and so we will sometimes call such a sequence a computation. And actually, this representation of a computation will be central as when we discuss the Cook-Levin theorem in the section on complexity.

The Value of Practice - (Udacity, Youtube)

Next, we are going get some practice tracing though Turing machine computations and programming Turing machines. The point of these exercises is not, so that you can put on your resume that you are good at programming Turing machines. And if someone asks you in an interview to write a program to test whether an input number is prime, I wouldn’t recommend trying to draw a Turing machine state diagram. Somehow, I doubt that will land you the job.

Rather, the point is to help you convince yourself that Turing machines can do everything that we mean by computation, and if you really had to, you could program a Turing machine to do anything that your could write a procedure to do in your favorite programming language. There are two ways to convince yourself of this. One is to just practice so that you build up enough of a facility with programming them – that is to say, it becomes easy enough for you– so that it just seems intuitive that you could do anything. Another way is to show that a Turing machine can simulate the action of models that are closer to real-world computers like the Random Access Model. We’ll do that in a later lesson. But, to be able to understand these simulation arguments, you need a pretty good facility with how Turing machines work anyway, so make the most of these examples and exercises.

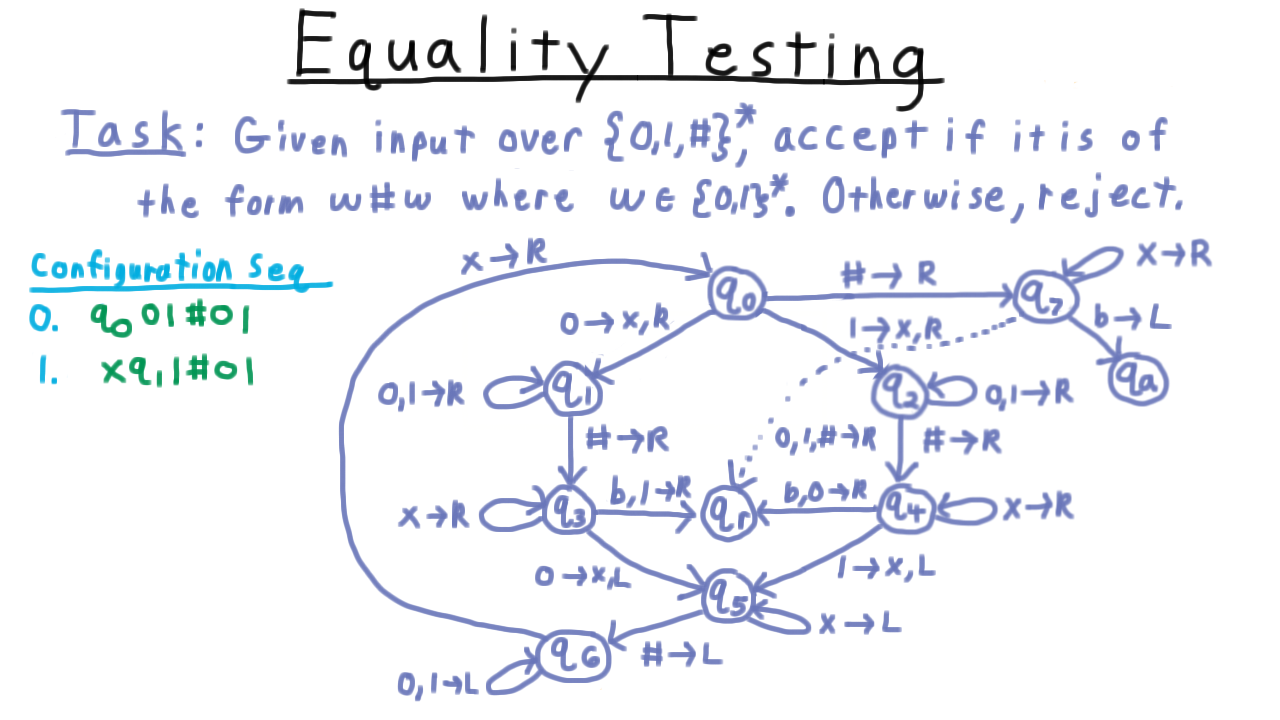

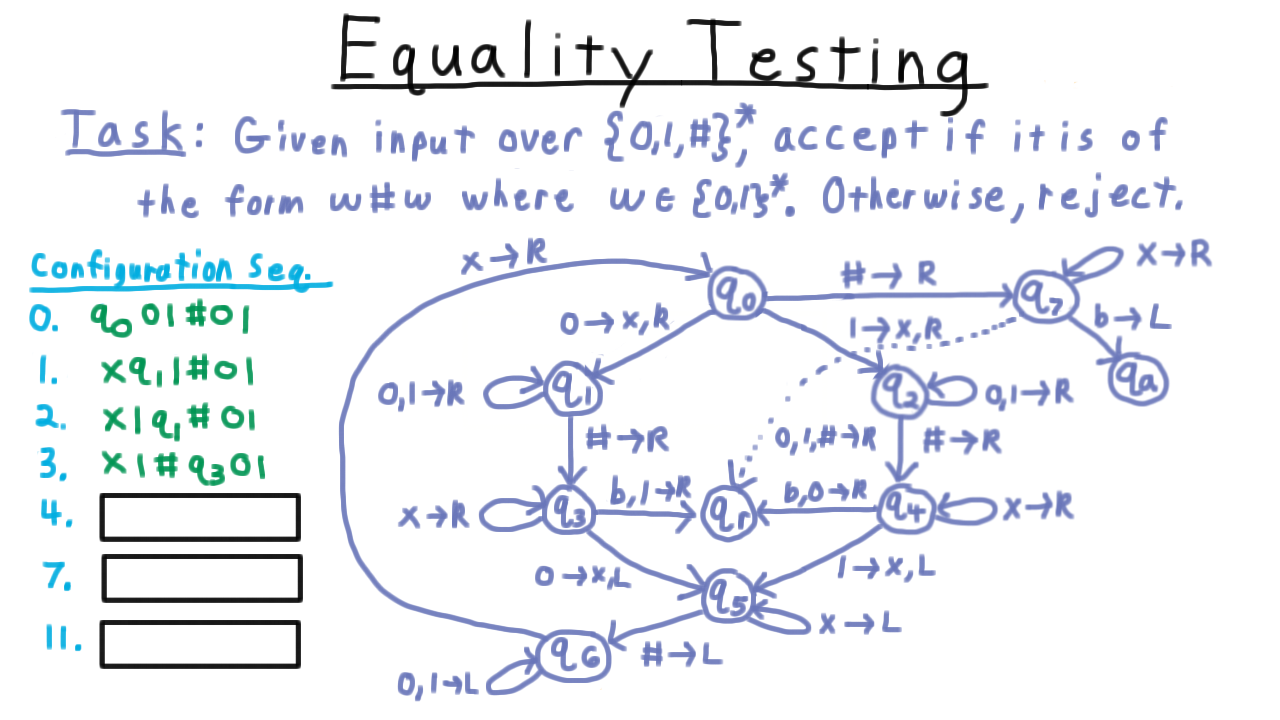

Equality Testing - (Udacity, Youtube)

To illustrate some of the key challenges in computing with Turing machines and how to overcome them, we’ll examine this task here, where we are given an input string, and want to tell if it is of the form w#w, where w is a binary string. In other words, we want to test whether the string to the left of the first hash is the same as the string to the right of it.

(Watch the video for an illustration on this example.)

As Turing machines go this is a pretty simple program, but as you can see here the state diagram gets a little messy. Like the Sisper textbook, I’ve used a little shorthand here in the diagram. When two symbols appear to the left of the arrow, I mean to either one of those. It’s easier than writing out a whole other edge. Also, sometimes, I will only give a direction on the right. Interpret that to mean that the tape should be left alone.

(Watch the video for an illustration of the state transitions in the diagram.)

Configuration Exercise - (Udacity)

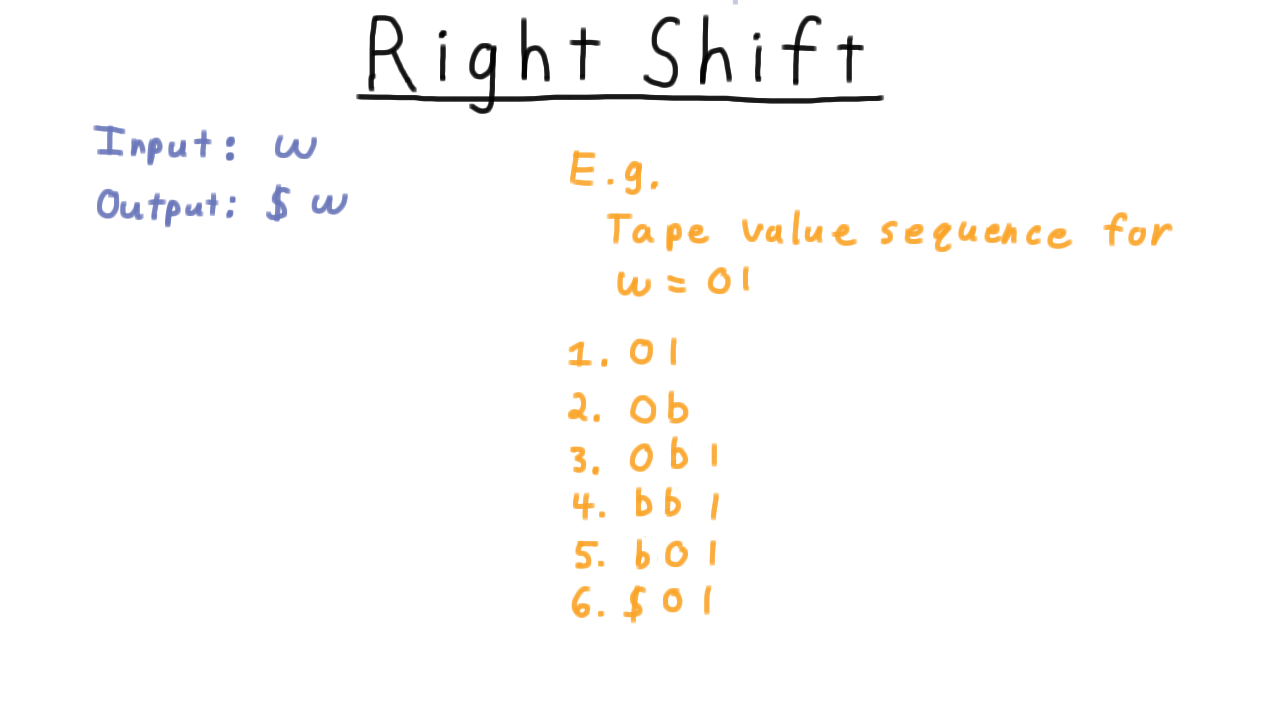

Right Shift - (Udacity)

Now, I want you to actually build a Turing machine. The goal is to right-shift the input and place a dollar sign symbol in front of the input and accept. Unlike our previous examples, accept vs. reject isn’t important here. This is more like a subroutine within a larger Turing machine that you might want to build, though you could think about it as a Turing machine that computes a function from all strings over the input alphabet to string over the tape alphabet, if you like.

Balanced Strings - (Udacity)

Language Deciders - (Udacity, Youtube)

If you completed all those exercises, then you are well on your way towards understanding how Turing machines compute, and hopefully, also are ready to be convinced that they can compute as well as any other possible machine.

Before we move onto that argument, however, there is some terminology about Turing machines and the languages that they accept and reject those that they might loop on that we should set straight. Some of the terms may seem very similar, but the distinctions are important ones and we will use them freely throughout the rest of the course, so pay close attention. First we define what it means for a machine to decide a language.

A Turing machine decides a language L if and only if accepts every x in L and rejects every x not in L.

For example, the Turing machine we just described decided the langauge L consisting of strings of the form w#w, where w is a binary string. We might also say the Turing machine computed the function that is one if x is in L and 0 otherwise. Or even just that the Turing machine computed L.

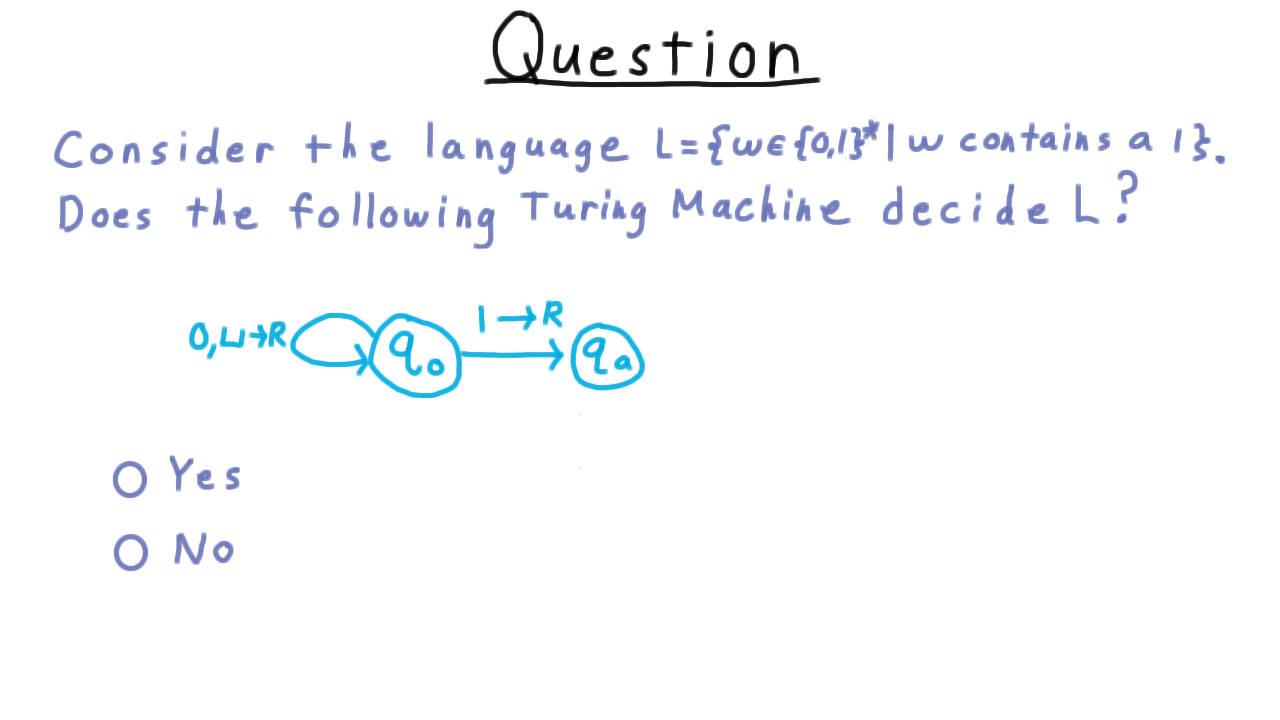

Contains a One - (Udacity)

Now for a question that is a little tricky. Consider the language that consists of all binary strings that contain a symbol 1. Does this Turing machine decide L?

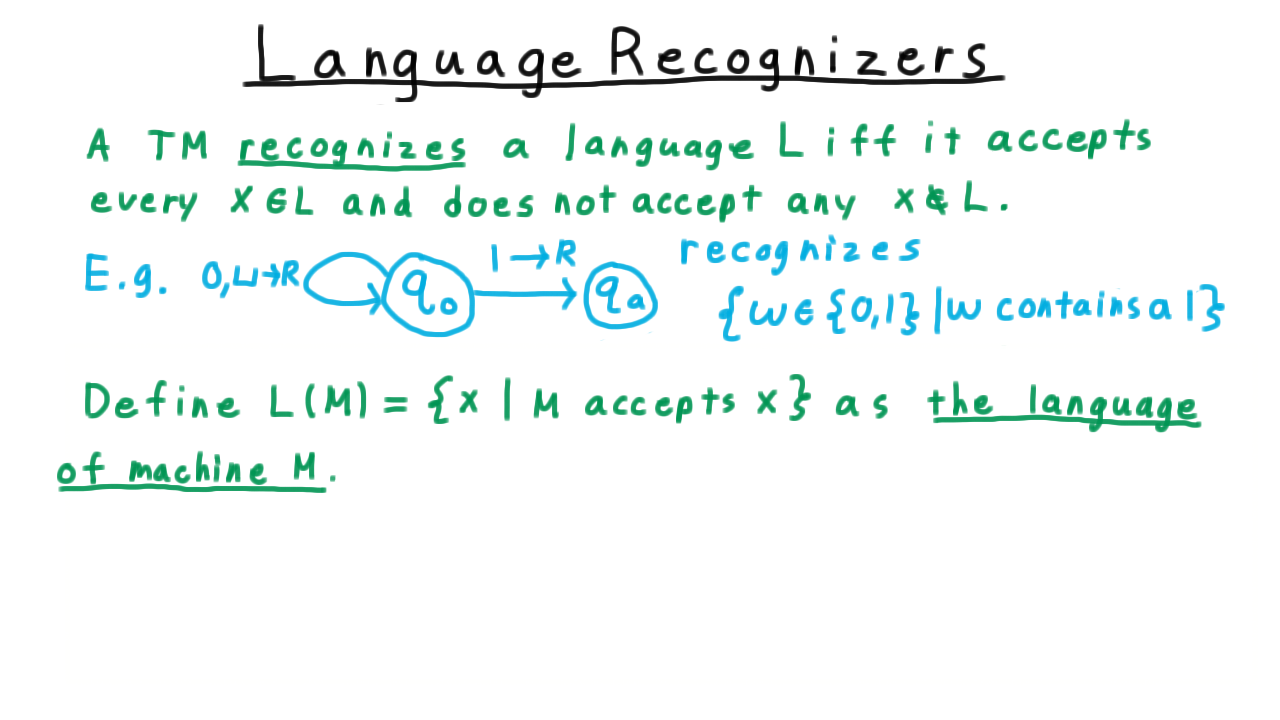

Language Recognizers - (Udacity, Youtube)

The possibility of Turing machines looping forever leads us to define the notion of a language recognizer.

We say that a Turing machine recognizes a language L iff and accepts every string in the language and does not accept every string not in the language. Thus, we can say that the Turing machine from the quiz does indeed recognize the language consisting of strings that contain a 1. It accepts those containing 1 and it doesn’t accept the others; it loops on them.

Contrast this definition with what it takes for a Turing machine to decide a language. Then it needs not only to accept everything in the language but it must reject everything else. It can’t loop like this Turing machine.

If we wanted to build a decider for this language we would need to modify the Turing machine so that it detects the end of the string and move into the reject state.

At this point, it also makes sense to define the language of a machine, which is just the language that the machine recognizes. After all, every machine recognizes some language, even if it is the empty one.

Formally, we define \(L(M)\) to be the set of strings accepted by \(M\), and we call this the language of the machine \(M\).

Conclusion - (Udacity, Youtube)

In this lesson, we examined the workings of Turing machines, and if you completed all the exercises, you should have a strong sense of how to use them to compute functions and decide languages. We’ve also seen how unlike some simpler models of computation, Turing machines don’t necessarily halt on all inputs. This forced us to distinguish between language deciders and language recognizers. Eventually, we will see how this problem of halting or not halting will be the key to understanding the limits of computation.

We’ve shown Turing machines can test equality of strings, not something that could be computed by simpler models like finite or push-down automaton that you might have seen in your undergraduate courses. But equality is a rather simple problem–can Turing machines solve the complex tasks we have our computer do. Can a Turing machines do your taxes or play a great game of chess? Next lesson, we’ll see that Turing machines can indeed solve any problem that our computers can solve and truly do capture the idea of computation.

Church-Turing Thesis - (Udacity)

Introduction - (Udacity, Youtube)

In this lecture, we will give strong evidence for the statement that

everything computable is computable by a Turing machine.

This statement is called the Church-Turing thesis, named for Alan Turing, whom we met in the previous lesson, and Alonzo Church, who had an alternative model of computation known as the lambda-calculus, which turns out to be exactly as powerful as a Turing machine. We call the Church-Turing thesis a thesis because it isn’t a statement that we can prove or disprove. In this lecture we’ll give a strong argument that our simple Turing machine can do anything today’s computers can do or anything a computer could ever do.

To convince you of the Church-Turing thesis, we’ll start from the basic Turing Machine and then branch out, showing that it is equivalent to machines as powerful as the most advanced machines today or in any conceivable future. We’ll begin by looking at multi-tape Turing machines, which in many cases are much easier to work with. And we’ll show that anything a multi-tape Turing machine can do, a regular Turing machine can do too.

Then we’ll consider the Random Access Model: a model capturing all the important capabilities of a modern computer, and we will show that it is equivalent to a multitape Turing machine. Therefore it must also be equivalent to a regular Turing machine. This means that a simple Turing machine can compute anything your Intel i7—or whatever chip you may happen to have in your computer—can.

Hopefully, by the end of the lesson, you will have understood all of these connections and you’ll be convinced that the Church-Turing thesis really is true. Formally, we can state the thesis as:

a language is “computable” if and only if it can be implemented on a Turing machine.

Simulating Machines - (Udacity, Youtube)

Before going into how single tape machines can simulate multitape ones, we will warm up with a very simple example to illustrate what is meant when we say that one machine can simulate another.

Let’s consider what I’ll call stay-put machines. These have the capability of not moving their heads in a computation step (which Turing machines, as we’ve defined them, are not allowed to do). So the transition function now includes S, which makes the head stay put. Now, this doesn’t add any additional computational capability to the machine, because I can accomplish the same things with a normal Turing machine. For every transition where the head stays put,

we can introduce a new state, and just have the head move one step right and then one step left. (Gamma here means match everything in the tape alphabet).

This puts the tape head back in the spot it started without affecting the tape’s contents. Except for occasionally taking an extra movement step, this Turing machine will operate in the same way as the stay-put machine.

More precisely, we say that two machines are equivalent

- if they accept the same inputs, reject the same inputs, and loop on the same inputs.

- considering the tape to be part of the output, equivalent machines also halt with the same tape contents.

Note that other properties of the machines (such as the number of states, the tape alphabet, or the number of steps in any given computation) do not need to be same. Just the relationship between the input and the output matters.

Multitape Turing Machines - (Udacity, Youtube)

Since having multiple tapes makes programming with Turing machines more convenient, and since it provides a nice intermediate step for getting into more complicated models, we’ll look at this Turing machine variant in detail. As shown in the figure here, each tape has its own tape head.

What the Turing machine does at each step is determined solely by its current state and the symbols under these heads. At each step, it can change the symbol under each head, and moves each head right or left, or just keeps it where it is. (With a one-tape machine, we always forced the head to move, but if we required that condition for multitape machines, the differences in tape head positions would always be even, which leads to awkwardness in programming. It’s better to allow the heads to stay put.)

Except for those differences, multitape Turing machines are the same as single-tape ones. We’ll only need to redefine the transition function. For a Turing machine with k tapes, the new transition function is

\[\delta: Q \times \Gamma^k : \rightarrow Q \times \Gamma^k \times \\{L,R,S\\}^k\]

Everything else stays the same.

Duplicate the Input - (Udacity, Youtube)

Let’s see a multitape Turing machine in action. Input always comes in on the first tape, and all the heads start at the left end of the tapes. Our task will be to duplicate this input, separated by a hash mark.

(See video for animation of the computation).

Substring Search - (Udacity)

Next, we’ll do a little exercise to practice using multitape Turing machines. Again, the point here is not so that you can put experience programming multitape Turing machines on your resume. The idea is to get you familiar with the model so that you can really convince yourself of the Church-Turing thesis and understand how Turing machines can interpret their own description in a later lesson.

With that in mind, your task is to build a two-tape TM that decides the language strings of the form x#y, where x is a substring of y. So for example, the string 101#01010 is in the language.

The second through fourth character of y match x. But on the other hand, 001#01010 is not in the language. Even though two 0s and a 1 appear in the string 01010, 001 is not a substring because the numbers are not consecutive.

Multitape SingleTape Equivalence - (Udacity, Youtube)

Now, I will argue that these enhanced multitape Turing machines have the same computational power as regular Turing machines. Multitape machines certainly don’t have less power: by ignoring all but the input tape, we obtain a regular Turing machine.

Let’s see why multitape machines don’t have any more power than regular machines.

On the left, we have a multitape Turing machine in some configuration, and on the right, we have created a corresponding configuration for a single-tape Turing machine.

On the single tape, we have the contents of the multiple tapes, with each tape’s contents separated by a hash. Also, note these dots here. We are using a trick that we haven’t used before: expanding the size of the alphabet. For every symbol in the tape alphabet of the multitape machine, we have two on the single tape machine, one that is marked by a dot and one that is unmarked. We use the marked symbols to indicate that a head of the multitape machine would be over this position on the tape.

Simulating a step of the multitape machine with the single tape version happens in two phases: one for reading and one for writing. First, the single-tape machine simulates the simultaneous reading of the heads of the multitape machines by scanning over the tape and noting which symbols have marks. That completes the first phase where we read the symbols.

Now we need to update the tape contents and head positions or markers as part of the writing phase. This is done in a second, leftward pass across the tape. (See video for an example.)

Note that it is possible that one of these strings will need to increase its length when the multitape reaches a position it hasn’t reached before. In that case, we just right-shift the tape contents to allow room for the new symbol to be written.

Once all that work is done, we return the head back to the beginning to prepare for the next pass.

So, all the information about the configuration of a multitape machine can be captured in a single tape. It shouldn’t be too hard to convince yourself that the logic of reaching and keeping track of the multiple dotted symbols and taking the right action should be as well. In fact, this would be a good for you to do on your own.

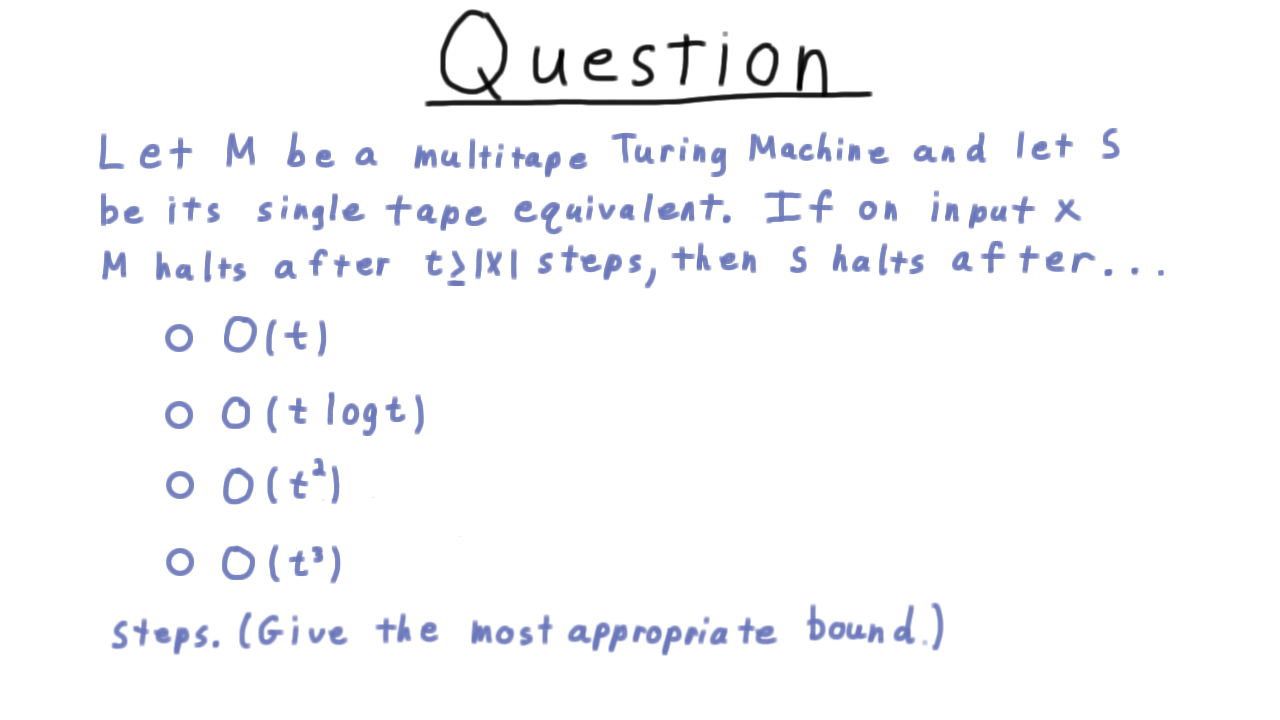

Analysis of Multitape Simulation - (Udacity)

Now for an exercise. No more programming Turing machines. Instead, I want you to try to figure out how long this simulation process takes. Let M be a multitape Turing machine and let S be its single-tape equivalent as we’ve defined it. If on input x, M halts after t steps, then S halts after… how many steps. Give the most appropriate bound. Note that we are treating the number of tapes k as a constant here.

This question isn’t easy, so just spend a few minutes thinking about it and take a guess, before watching the video answer.

RAM Model - (Udacity, Youtube)

There are other curious variants of the basic Turing machines: we can restrict them so that a symbol on a square can only be changed once, we can let them have two-way infinite tapes, or even let them be nondeterministic (we’ll examine this idea when we get to complexity). All of these things are equivalent to Turing machines in the sense we have been talking about, and it’s good to know that they are equivalent.

Ultimately, however, I doubt that the equivalence of those models does much to convince anyone that Turing machines capture the common notion of computation. To make that argument, we will show that a Turing machine is equivalent to the Random Access model, which very closely resembles the basic CPU/register/memory paradigm behind the design of modern computers.

Here is a representation of the RAM model.

Instead of operating with a finite alphabet like a Turing machine, the RAM model operates with non-negative integers, which can be arbitrarily large. It has registers, useful for storing operands for the basic operations and an infinite storage device analogous to the tape of a regular Turing machine. I’ll call this memory for obvious reasons. There are two key differences between this memory and the tape of a regular Turing machine:

- each position on this device stores a number an

- any element can be be read with a single instruction, instead of moving a head over the tape to the right spot.

In addition to this storage, the machine also contains the program itself expressed as a sequence of instructions and a special register called the program counter, which keeps track of which instruction should be executed next. Every instruction is one of a finite set that closely resembles the instructions of assembly code. For instance, we have the instruction read j, which reads the contents from the jth address on the memory and places it in register 0. Register 0, by the way, has a special status and is involved in almost every operation. We also have a write operation, which writes to the jth address in memory. For moving data between the registers, we have load, which write to \(R_0\), and store which writes from it, as well as add, which increases the number in \(R_0\) by the amount in \(R_j\). All of these operations cause the program counter to be incremented by 1 after they are finished.

To jump around the list of instructions—as one needs to do for conditionals—we have a series of jump instructions that change the program counter, sometimes depending on the value in \(R_0\).

And finally, of course, we have the halt instruction to end the program. The final value in \(R_0\) determines whether it accepts or rejects. Note that in our definition here there is no multiplication. We can achieve that through repeated addition.

We won’t have much use for the notation surrounding the RAM model, but nevertheless it’s good to write things down mathematically, as this sometimes sharpens our understanding. In this spirit, we can say that a Random Access Turing machine consists of :

- a natural number \(k\) indicating the number of registers

- and a sequence of instructions \(\Pi\).

The configuration of a Random Access machine is defined by

- the counter value, which is 0 for the halting state and indicates the next instruction to be executed otherwise.

- the register values and the values in the memory, which can be expressed as a function.

(Note that only a finite number of the addresses will contain a nonzero value, so this function always has a finite representation. We’ll use 1-based indexing, hence the domain for the tape is the natural numbers starting from one.)

Equivalence of RAM and Turing Machines - (Udacity, Youtube)

Now we are ready to argue for the equivalence of our Random Access Model and the traditional Turing machine. To translate between the symbol representation of the Turing machine and numbers of RAM, we’ll use a one-to-one correspondence E.

The blank symbol is mapped to to zero (\(E(\sqcup) = 0)so that the default value of the tape corresponds to the default value for memory.

First, we argue that a RAM can simulate a single tape Turing machine. The role of the tape played in the Turing machine will be played by the memory We'll keep track of the head position in a fixed register, say \)R_1\(. And the program and the program counter will implement the transition function. Here, I've written out in psuedocode what this might look like for the simple Turing machine shown over here, which just replaces all the ones with zeros and then halts.

Now we argue the other way: that a traditional Turing machine can simulate a RAM. Actually, we’ll create a multitape Turing machine that implements a RAM since that is a little easier to conceptualize. As we’ve seen, anything that can be done on a multitape Turing machine can be done with a single tape.

We will have one tape per register, and each tape will represent the number stored in the corresponding register.

We also have another tape that is useful for scratch work in some of the instructions that involve constants like add 55.

Then we have two tapes corresponding to the random access device. One is for input and output, and the other is for simulating the contents of the memory device during execution. Storing the contents of the random access device is the more interesting part. This is done just by concatenating the (index, values) pairs using some standard syntax like parentheses and commas.

The program of the RAM must be simulated by the state transitions of the Turing machine. This can be accomplished by having a subroutine or sub-Turing machine for each instruction in the program. The most interesting of these instructions are the ones involving memory. We simulate those by searching the tape that stores the contents of the RAM for one of these pairs that has the proper index and then reading or writing the value as appropriate. If no such pair is found, then the value on the memory device must be zero.

After the work of the instruction is completed, the effect of incrementing the program counter is achieved by transitioning to the state corresponding to the start of the next instruction. That is, unless the instruction was a jump, in which case that transition is effected. Once the halt instruction is executed the contents of the tape simulating the random access device are copied out onto the I/O tape.

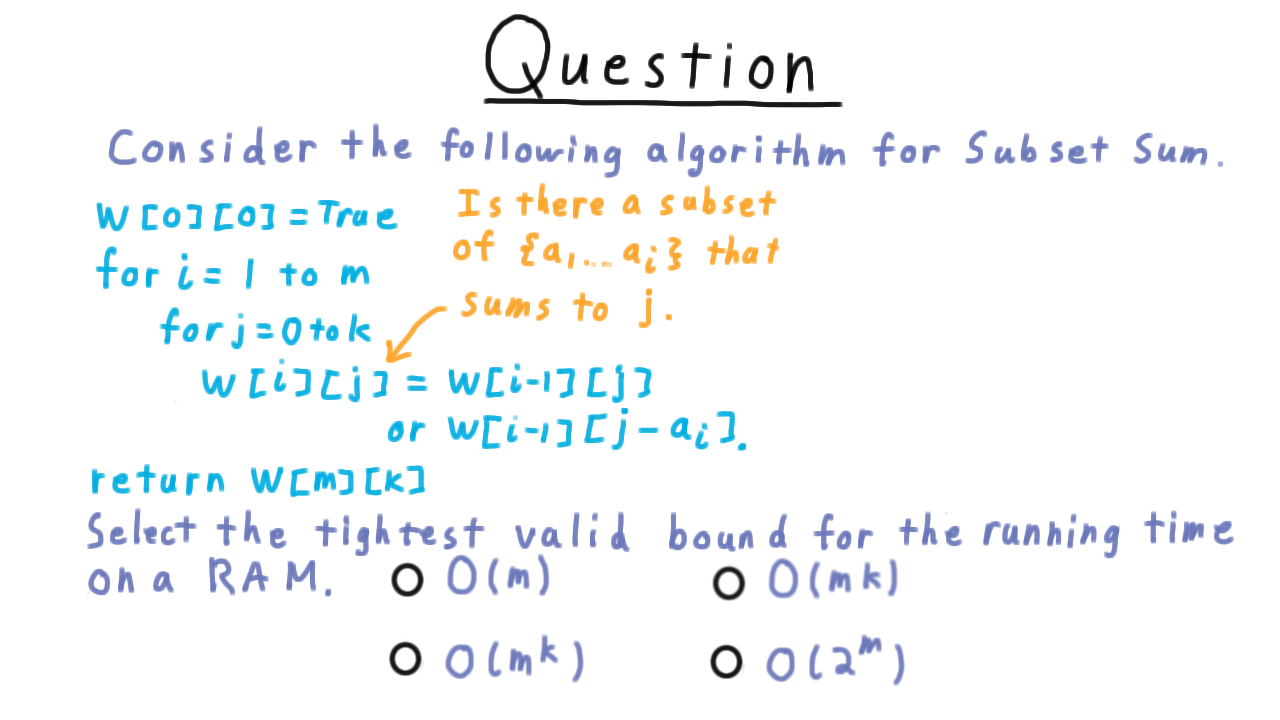

RAM Simulation Running Time - (Udacity)

Given this description about how a traditional Turing machine can simulate a Random Access Model, I want you to think about how long this simulation takes. Let R be a random access machine and let M be its multi-tape equivalent. We’ll let n be the length of the binary encoding of the input to R and let t be the number of steps taken by R. Then M takes how long to simulate R?

This is a tough question, so I’ll give you a hint: the length of the representation of a number increases by at most a constant in each step of the random access machine R.

Conclusion - (Udacity, Youtube)

Once you know that a Turing machine can simulate a RAM, then you know it can simulate a standard CPU. Once you can simulate a CPU, you can simulate any interpreter or compiler and thus any programming language. So anything you can run on your desktop computer can be simulated by a Turing machine.

What about multi-core, cloud computing, probabilistic, quantum and DNA computing? We won’t do it here, but you can prove Turing machines can simulate all those models as well. The Church-Turing thesis has truly stood the test of time. Models of computation have come and go but none have been any match for the Turing machine.

Why should we care about the Church-Turing thesis? Because there are problems that Turing machines can’t solve. We argued this with counting arguments in the first lecture and will give specific examples in future lectures. If these problems can’t be solved by Turing machines they can’t be solved by any other computing device.

To help us describe specific problems that one cannot compute, in the next lecture we discuss two of Turing’s critical insights: that a computer program can be viewed as data—as part of the input to another program—and that one can have a universal Turing machine that can simulate the code of any other computer: one machine to rule them all.

Universality - (Udacity)

Introduction - (Udacity, Youtube)

In 1936, when Alan Turing wrote his famous paper “On Computable Numbers”, he not only created the Turing machine but had a number of other major insights on the nature of computation. Turing realized that the computer program itself could also be considered as part of the input. There really is no difference between the program and data. We all take that for a given today: we create computer code in a file that gets stored on the computer no differently than any other type of file. And data files often have computer instructions embedded in them. Even modern computer fonts are basically small programs that generate readable characters at arbitrary sizes.

Once he took the view of program code as data, Turing had the beautiful idea that a Turing machine could simulate that code. There is some fixed Turing machine, a universal Turing machine, that can simulate the code of any other Turing machine. Again, this is an idea that we take for granted today, as we have interpreters and compilers that can run the code of any programming language. But, back in Turing’s day, this idea of a universal machine was the major breakthrough that allowed Turing, and will allow us, to develop problems that Turing machines or any other computer cannot solve.

Encoding a Turing Machine - (Udacity, Youtube)

Before we can simulate or interpret a Turing machine, we first have to represent it using a string. Notice that this presents an immediate challenge: our universal Turing machine must use a fixed alphabet for its input and have a fixed number of states, but it must be able to simulate other Turing machines with arbitrarily large alphabets and an arbitrary numbers of states. As we’ll see, one solution is essentially to enumerate all the symbols and states and represent them in binary. There are lots of ways to do this: the way we’re going to do it is a compromise of readability and efficiency.

Let \(M\) be a Turing machine with states \(Q = \{q_0,\ldots q_{n-1}\}\) and tape alphabet \(\Gamma = \{a_1, \ldots a_m \}\). Define \(i\) and \(j\) so that \(2^i\) is at least the number of states and \(2^j\) is at least the number of tape symbols. Then we can encode a state \(q_k\) as the concatenation of the symbol q with the string w, where w is the binary representation of \(k\). For example, if there are 6 states, then we need three bits to encode all the states. The state \(q_3\) would be encoded as the string q011. By convention, we make

- \(q_0\) the initial state,

- \(q_1\) the accept state,

- \(q_2\) the reject state.

We use an analogous strategy for the symbols, encoding \(a_k\) as the symbol a followed by the string w, where w is the binary representation of k.

For example, if there are 10 symbols, then we need four bits to represent them all. If \(a_5\) is the star symbol, we would be encode that symbol as a0101.

Let’s see an encoding for an example.

This example decides whether the input consists of a number of zeros that is a power of two. To encode the Turing machine as a whole we really just need to encode its transition function. We’ll start by encoding the black edge from the diagram. We are going from state zero, seeing the symbol zero, and we go to state three, we write symbol 0 and we move the head to the right.

Remember that the order here is input state, input symbol. Then output state, output symbol, and finally direction.

Encoding Quiz - (Udacity)

Now, I’m not going to write out all the rest of the transitions, but I think it would be a good idea for you to do one more. So use this red box here to encode this red transition.

Building a Universal Turing Machine - (Udacity, Youtube)

Now, we are ready to describe how to build this all-powerful universal Turing machine. As input to the universal machine, we will give the encoding of the input and the encoding of M, separated by a hash. We write this as

The goal is to similate M’s execution when given the input w, halting in an accept or reject state, or not halting at all, and ultimately outputing the encoding of the output of M on w when M does halt. We’ll describe a 3-tape Turing machine that achieves this goal of simulating M on w.

The input comes in on the first tape. First, we’ll copy the description of the machine to the second tape and copy the initial state to the third tape. For example, the tape contents might end up like this.

The we rewind all the heads and begin the second phase.

Here, we search for the appropriate tuple in the description of the machine. The first element has to match the current state stored on tape three, and the symbol part has to match the encoding on the tape 1. If no match is found, then we halt the simulation and put the universal machine in an accepting or rejecting state according to the current state of the machine being interpreted. If there is a match, however, then we apply the changes to the first tape and repeat.

Actually, interpreting a Turing machine description is surprisingly easy.

We’ve just seen how Turing machines are indeed reprogrammable just like real-world computers. This lends further support to the Church-Turing thesis, but it also has significance beyond that. Since the input to a Turing machine can be interpreted as a Turing machine, this suggest that programs are a type of data. But arbitrary data can also be interpretted as a (possibly invalid) Turing machine. So is there any difference between data and program? Perhaps, we can leave this question for the philosophers.

Abstraction - (Udacity, Youtube)

At this point, the character of our discussion of computability is going to change signficantly. We’ve established the key properties of Turing machines: that they can do anything we mean by computation and that we can pass a description of a Turing machine to a Turing machine as input for it to simulate. With these points established, we won’t need to talk about the specifics of Turing machines much anymore. There will be little about tapes or states, transitions or head positions. Instead, we will think about computation at a very high level, trusting that if we really had to, we could write out the Turing machine to do it. If we need to write out code, we will do so only in pseudocode or with pictures.

What is there left to talk about? Well, remember from the very first lesson that we argued that not all functions are computable, or as we later said, not all languages can be decided by Turing machines. We’re now in a good position to talk about some of these undecidable languages. We had to wait until we established the universality of Turing machines because these languages are going to consist of strings that encode Turing machines. The rest of the lesson will review the definitions of recognizability and decidability, and then we’ll talk about the positive side of the story: the languages about Turing machines that we CAN decide or recognize. As we’ll see, there are plenty that we can’t.

Language Recognizers - (Udacity, Youtube)

Recall that a Turing machine recognizes a language if it accepts every string in the language and does not accept anything that is not in the language. It could either reject or loop. This Turing machine here recognized the language of binary strings containing a 1, but it looped on those that don’t contain a 1.

In order to decide a language, the Turing machine must not only accept every string in the language but it must also explicitly reject every string that is not in the language. This machine achieves that by not looping on the blanks.

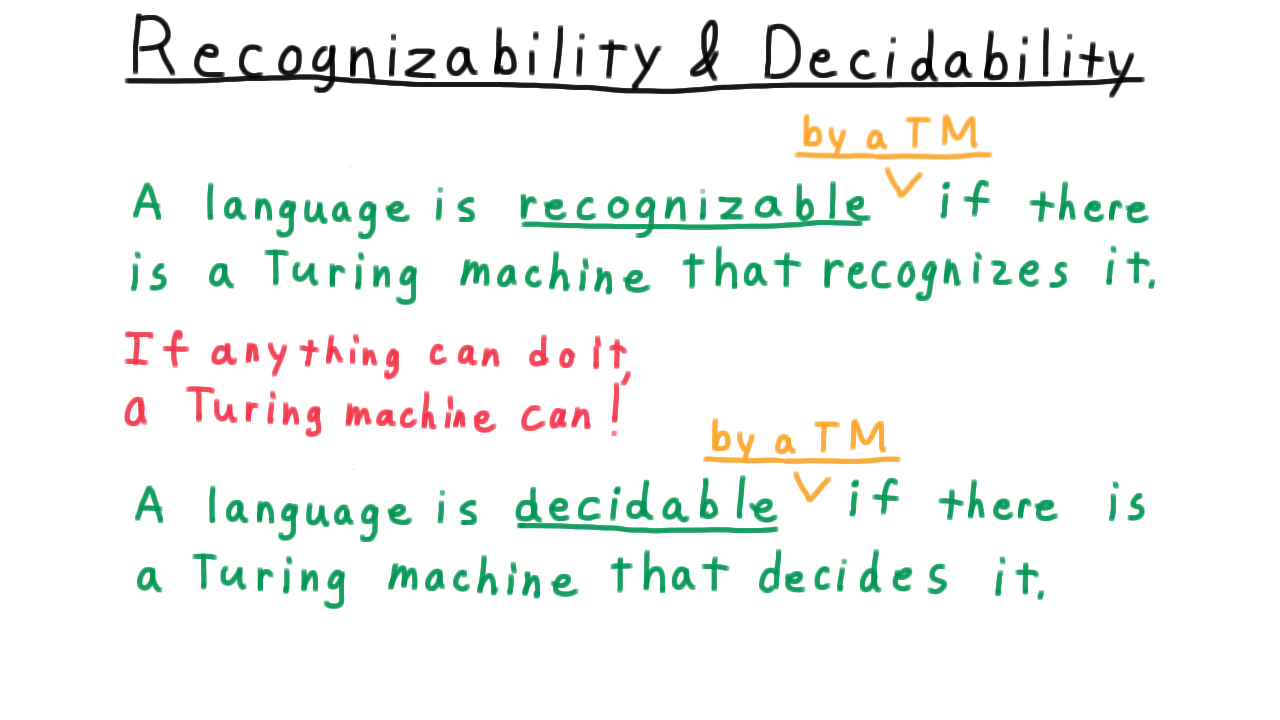

Recognizability and Decidability - (Udacity, Youtube)

Ultimately, however, we are not interested in whether a particular Turing machine recognizes or decides a language; rather we are interested in whether there is a Turing machine that can recognize or decides the language.

Therefore, we say that a language is recognizable if there is a Turing machine that recognizes it, and we say that a language is decidable if there is a Turing machine that decides it.

A language is recognizable if there is a Turing machine that recognizes it.

A language is decidable if there is a Turing machine that decides it.

Now, looking at this someone might object, “Shouldn’t we say ‘recognizable by a Turing machine’ and ‘decidable by a Turing machine’?” Of course, we could and the statements would still be true. But we don’t, the reason being that we strongly believe that if anything can do it a Turing machine can! That’s the Church-Turing thesis.

In an absolute sense, we believe that a language is only recognizable by anything if a Turing machine can recognize it and a language is only decidable by anything if a Turing machine can decide it, and we use terms that reflect that belief.

Now other terms are sometimes used instead of recognizable and decidable. Some say that Turing machines compute languages, so to go along with that they say that languages are computable if they there is a Turing machine that computes it. Another equivalent term for decidable is recursive. Mathematicians often prefer this word.

And those who use that term will refer to recognizable languages as recursively enumerable. Some also call these languages Turing-acceptable and semi or partially decidable.

We should also make clear the relationship between these two terms. Clearly, if a language is decidable, then it is also recognizable; the same Turing machine works for both. It feels like it should also be true that if a language is recognizable and its complement is also recognizable, then the language is decideable. This is true, but there is a potential pitfall here that we need to make sure to avoid.

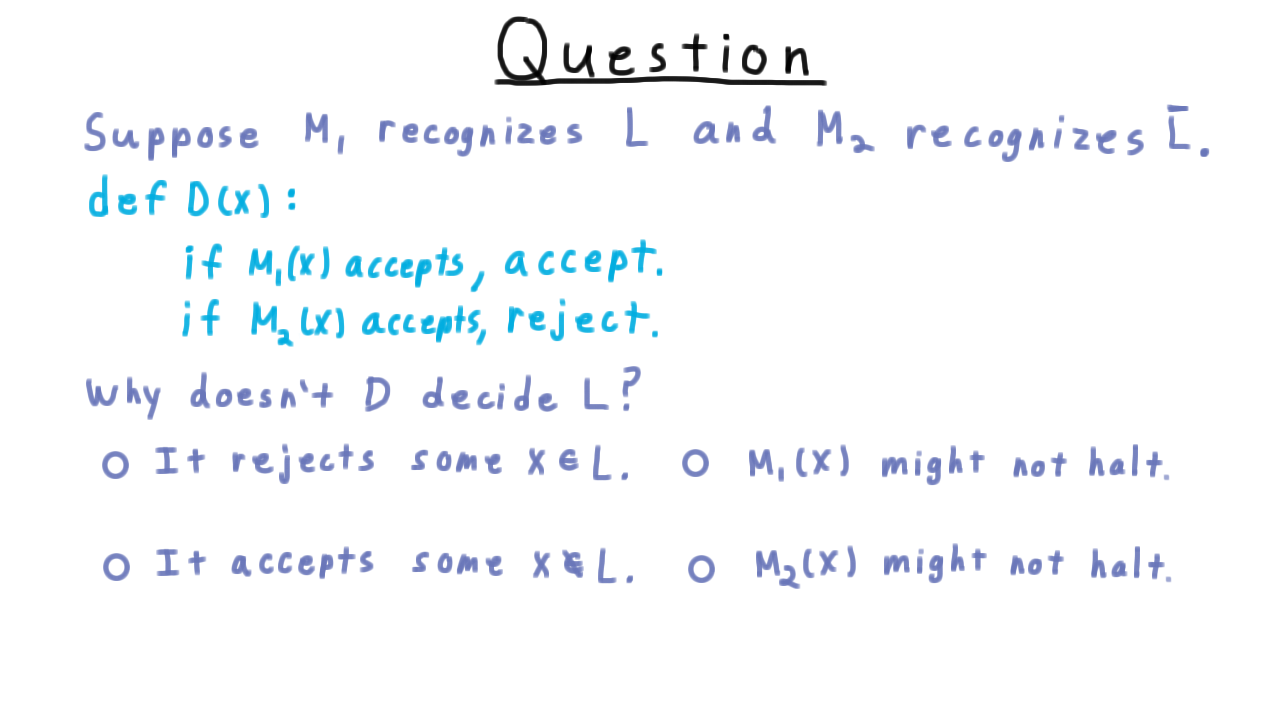

Decidability Exercise - (Udacity)

Suppose that we are given one machine \(M_1\) that recognizes a language \(L\) and another \(M_2\) that recognizes \(\overline\{L\}\). If we were to ask your average programmer to use these machines to decide the language, his first guess might go something like this.

This program will not decide L, however, and I want you to tell me why. Check the best answer.

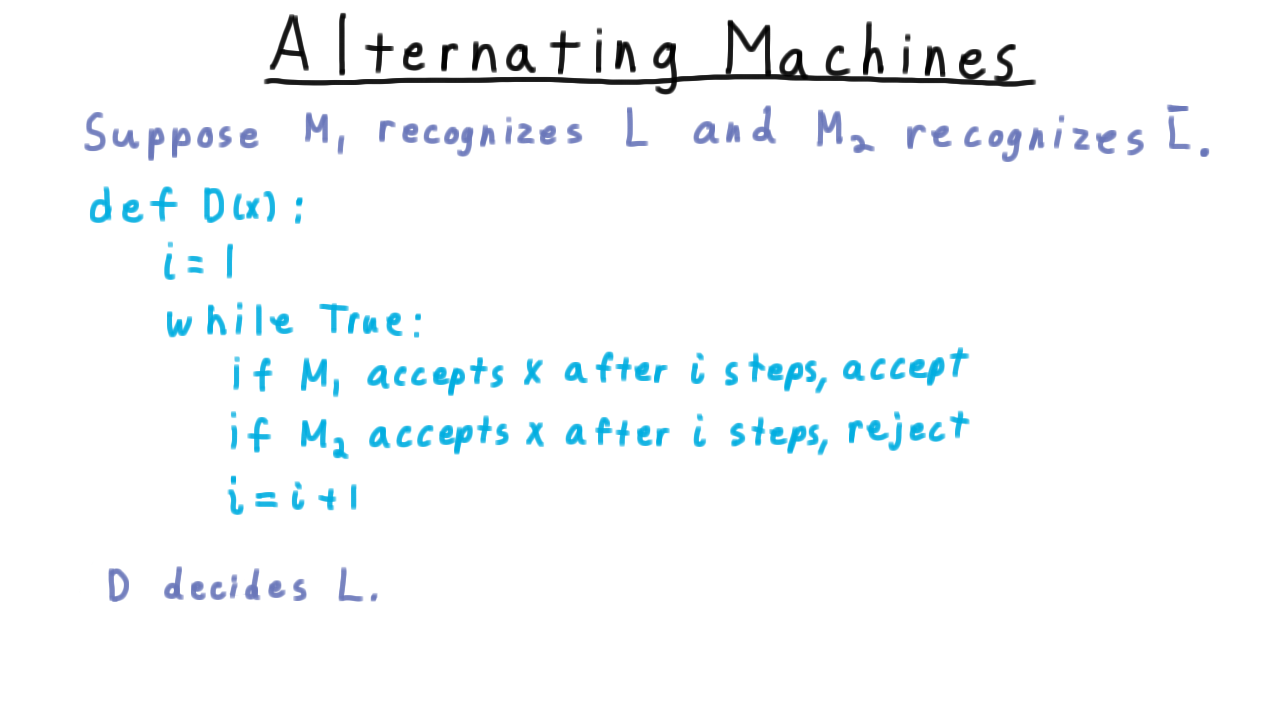

Alternating Machines - (Udacity, Youtube)

Here is the alternating trick in some more detail. Suppose that \(M_1\) recognizes a language and \(M_2\) recognizes its complement. We want to decide the language.

In psuedocode, the alternating strategy might look like this.

In every step, we execute both machines one more step than in the previous iteration. Note that it doesn’t matter if we save the machines configuration and start where we left off or start over. The question is whether we get the right answer, not how fast. The string has to be either in \(L\) or in \(\overline\{L\}\), so one of these has to halt after some finite number of steps, and when \(i\) hits that value, this program will give the right answer.

Overall then, we have the following theorem.

A language L is decidable if and only if L and its complement are both recognizable.

Counting States - (Udacity)

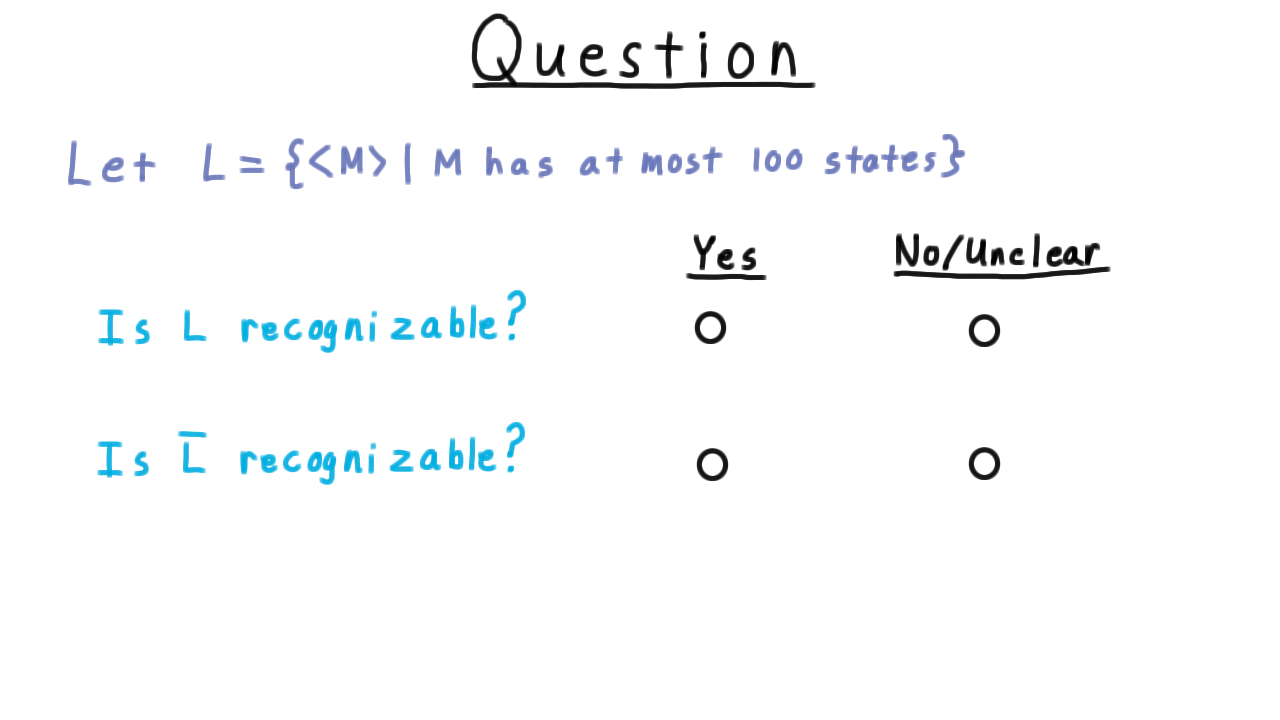

Now we’re going to go through a series of languages and try to figure out if they and their complements are recognizable. First, let’s examine the set of strings that describe a Turing machine that has at most 100 states. You can assume the particular encoding for Turing machines that we used, but any encoding will serve the same purpose.

Indicate whether you think that L is recognizable and whether L complement is recognizable. We don’t have a way proving that a language is not recognizable yet, so I’ve labeled the “No” option as unclear.

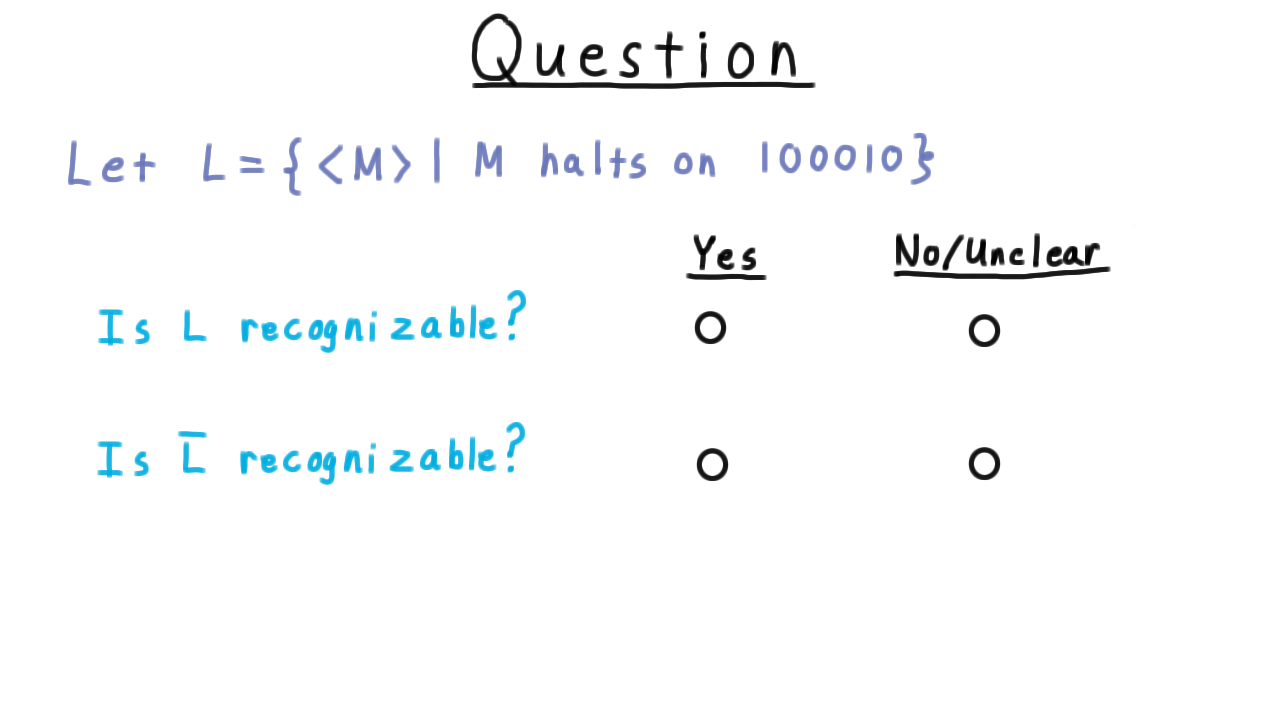

Halting on 34 - (Udacity)

Next, we consider the set of Turing machines that halt on the number 34 written in binary. Indicate whether L and L complement are recognizable.

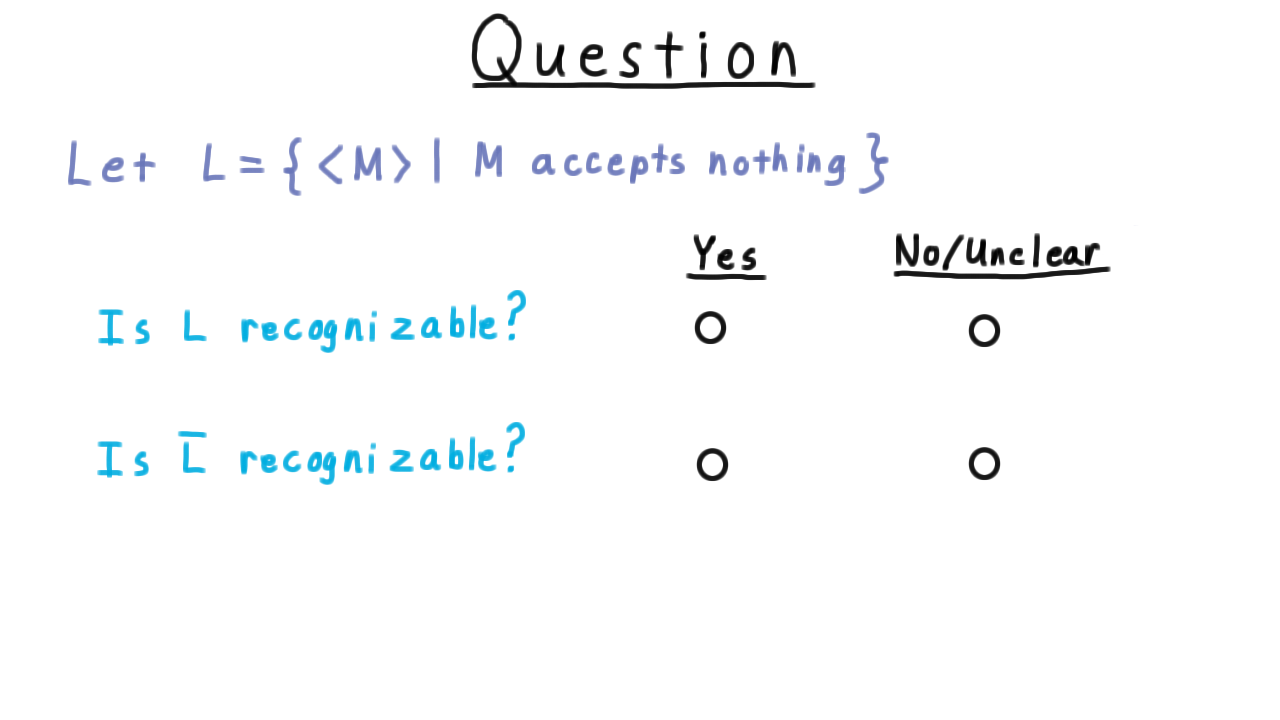

Accepting Nothing - (Udacity)

Let’s consider another language, this time the set of Turing machine descriptions where the Turing machine accepts nothing.

Tell me: are either L or L complement recognizable?

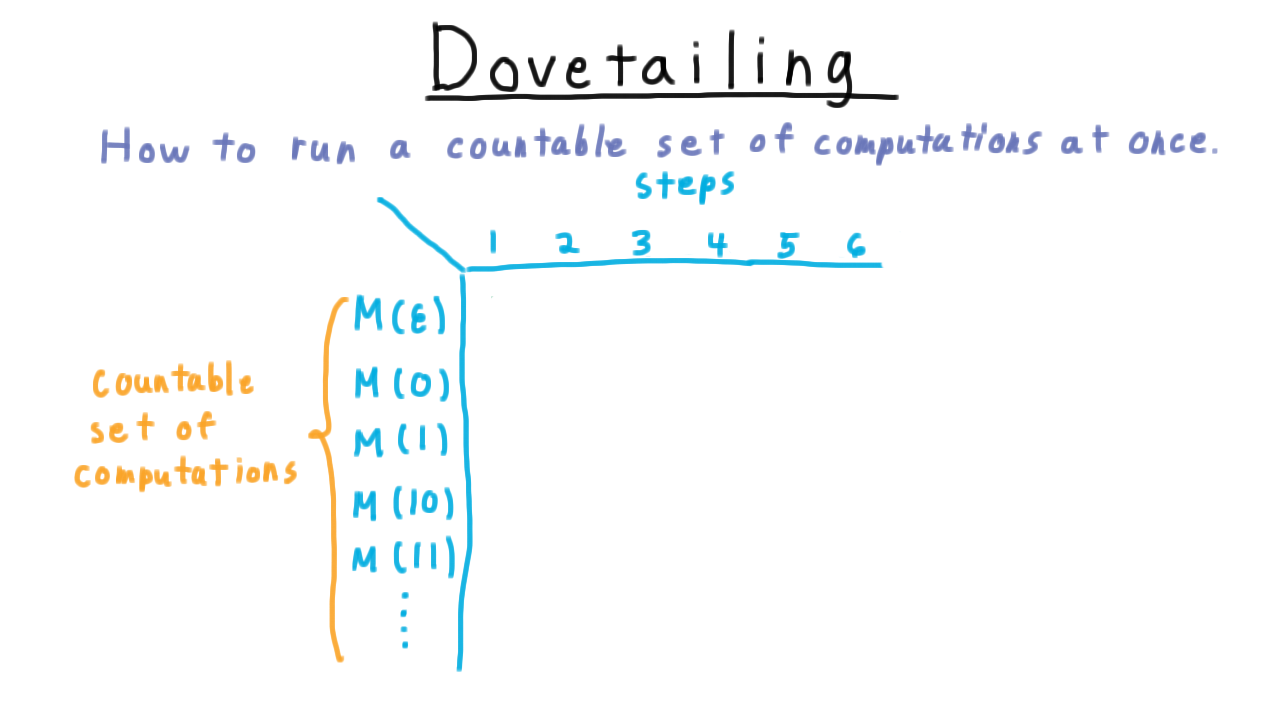

Dovetailing - (Udacity, Youtube)

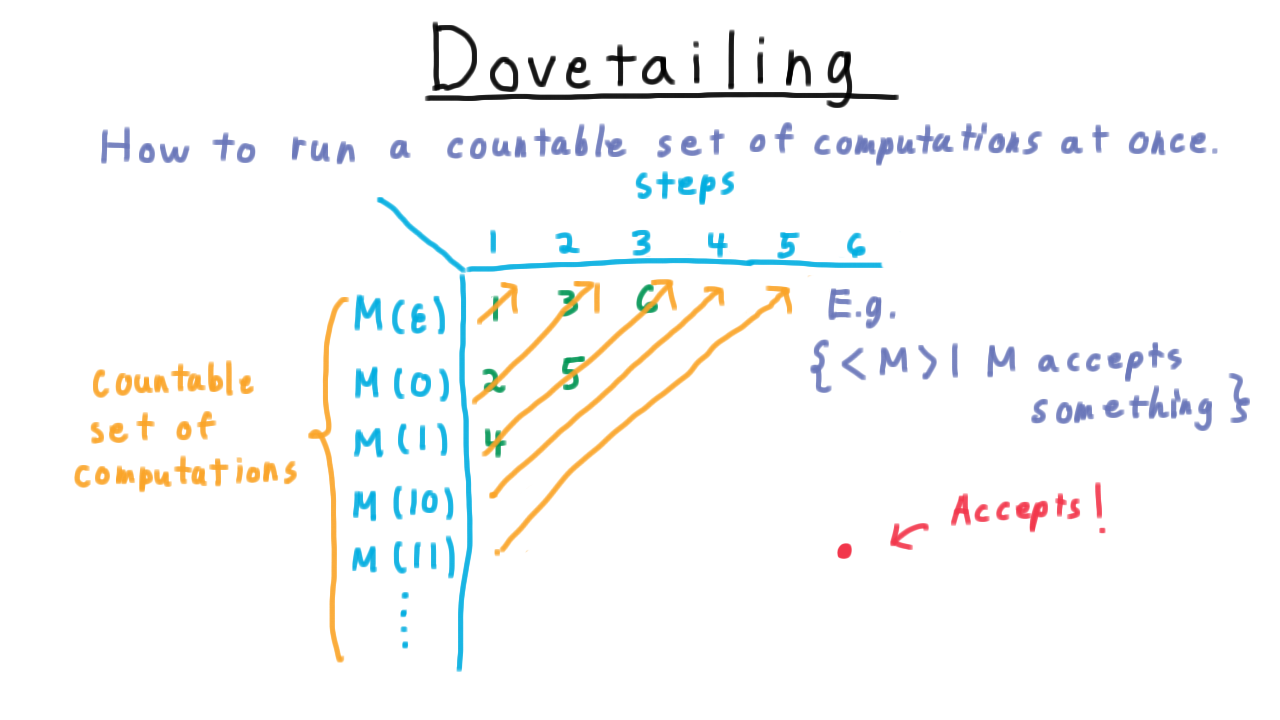

Here is the dovetailing trick, which let’s you run a countable set of computations all at once. We’ll illustrate the technique for the case where we are simulating a machine M on all binary strings with this table here.

Every row in the table corresponds to a computation or the sequence of configurations the machine goes through for the given input. Simulating all of these computation means hitting every entry in this table. Note that we can’t just simulate M on the empty string first or we might just keep going forever, filling out the first row and never getting to the second. This is the same problem that we encountered when trying to show that a countable union of countable sets is countable, or that the set of rational numbers is countable.

And the solution is the same too. We go diagonal by diagonal, first simulating the first computation for one step. Then the second computation for one step and the first computation for two steps, etc. Eventually every configuration in the table is reached.

Thus, if we are trying to recognize the language of Turing machine descriptions where the Turing machine accepts something, then a Turing machine in the language must accept some string after finite number of steps.

This will correspond to some some entry in the table, so we eventually reach it and accept.

Always Halting - (Udacity)

Let’s consider one last language, the set of descriptions of Turing machines that halt on every input. Think carefully, and indicate whether you think that either L or L complement is recognizable.

Conclusion - (Udacity, Youtube)

In this and the previous lessons, we’ve developed a set of ideas and definitions that sets the stage for understanding what we can and cannot solve on a computer.

- We’ve seen the Turing machine, an amazingly simple model that can only move a tape back and forth while reading and writing on that tape.

- We’ve seen that despite it’s simplicity this model captures the full power of computers now and forever.

- We’ve seen how to consider Turing machine programs as data themselves and create a universal Turing machine that can simulate those programs.

- And at the end of this lesson, we saw some languages defined using program as data that don’t seem to be easily decidable.

In the next lecture we will show how to prove many languages cannot be solved by a Turing machine, including the most famous one, the halting problem.

Undecidability - (Udacity)

Introduction - (Udacity, Youtube)

As a computer scientist, you have almost surely written a computer program that just sits there spinning its wheels when you run it. You don’t know whether the program is just taking a long time or if you made some mistake in the code and the program is in an infinite loop. You might have wondered why nobody put a check in the compiler that would test your code to see whether it would stop or loop forever. The compiler doesn’t have such a check because it can’t be done. It’s not that the programmers are not smart enough, or the computers not fast enough: it is just simply impossible to check arbitrary computer code to determine whether or not it will halt. The best you can do is simulate the program to know when it halts, but if it doesn’t halt, you can never be sure if it won’t halt in the future.

In this lesson we’ll prove this amazing fact and beyond, not only can you not tell whether a computer halts, but you can’t determine virtually anything about the output of a computer. We build up to these results starting with a tool we’ve seen from our first lecture, diagonalization.

Diagonalization - (Udacity, Youtube)

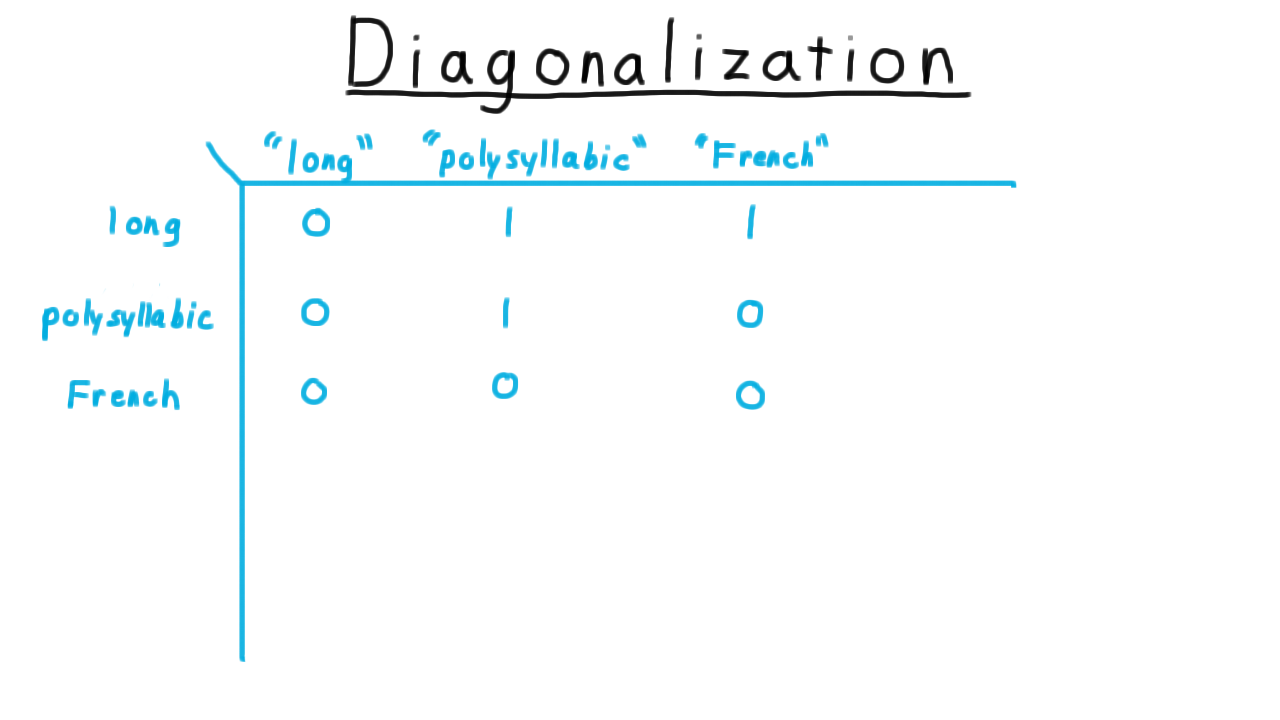

The diagonalization argument comes up in many contexts and is very useful for generating paradoxes and mathematical contradictions. To show how general the technique is, let’s examine it in the context of English adjectives.

Here I’ve created a table with English adjectives both as the rows and as the columns. Consider the row to be the word itself and the column to be the string representation of the word. For each entry, I’ve written a 1 if the row adjective applies to the column representation of the word. For instance, “long” is not a long word, so I’ve written a 0. “Polysyllabic” is a long word, so I’ve written a 1. “French” is not a French word, it’s an English word, so I’ve written a 0. And so forth.

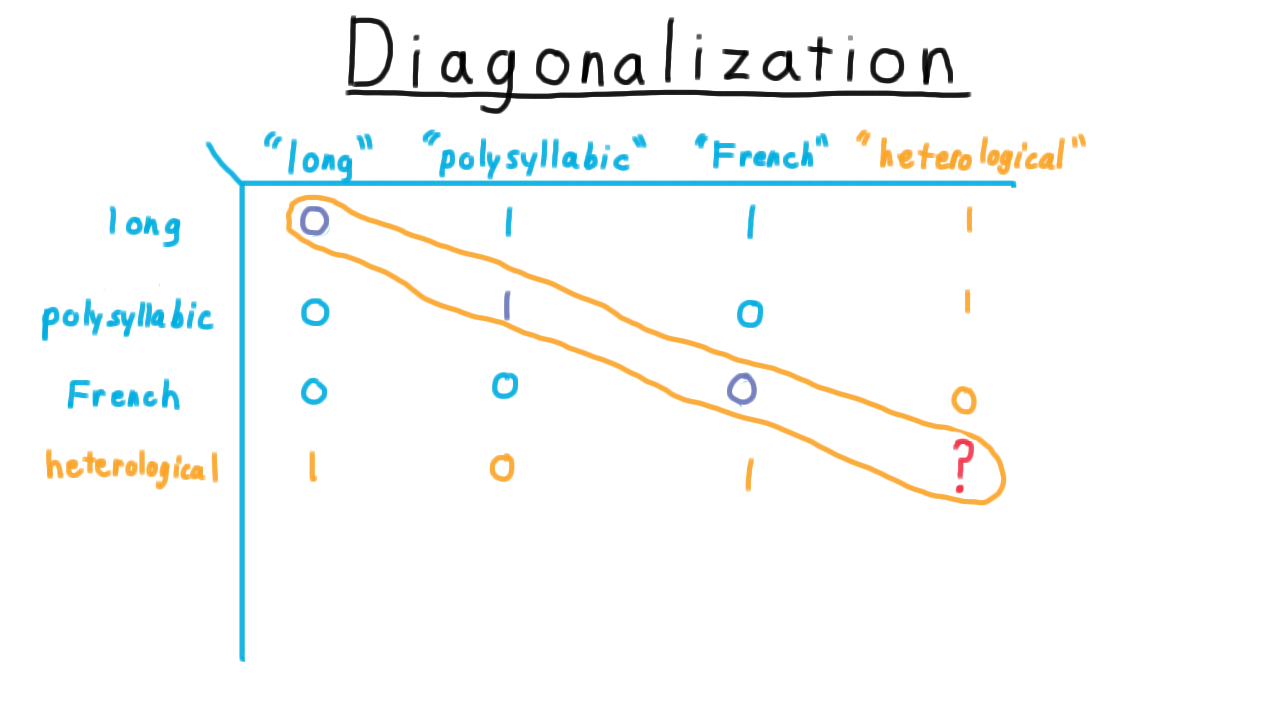

So far, we haven’t run into any problems. Now, let’s make the following definition: a heterological word is a word that expresses a property that its representation does not possess. We can add the representation to the table without any problems. It is a long, polysyllabic, non-French word. But when we try to add the meaning to the table, we run into problems. Remember: a heterological word is one that express a property that its representation does not posseses. “Long” is not a long word, so it is heterological. “Polysyllabic” is a polysyllabic word, so it is not heterological, and “French” is not a French word, so it is heterological.

What about “heterological,” however? If we say that it is heterological (causing us to put a 1 here), then it applies to itself and so it can’t be heterological. On the other hand, if we say it is not heterological (causing us to put a zero here), then it doesn’t apply to itself and it is heterological. So there really is no satisfactory answer here. Heterological is not well-defined as an adjective.

For English adjectives, we tend to simply tolerate the paradox and politely say that we can’t answer that question. Even in mathematics the polite response was simply to ignore such questions until around the turn of the 20th century when philosophers began to look for a more solid logical foundations for reasoning and for mathematics in particular.

Naively, one might think that a set could be an arbitrary collection. But what about the set of all sets that do not contain themselves? Is this set a member of itself or not? This paradox posed by Bertrand Russell wasn’t satisfactorily resolved until the 1920s with the formulation of what we now call Zermelo-Fraenkel set theory.

Or from mathematical logic, consider the statement, “This statement is false.” If this statement is true, then it says that it is false. And if this statement is false, then it says so and should be true. It turns out that falsehood in this sense isn’t well-defined mathematically.

At this point, you’ve probably guessed where this is going for this course. We are going to apply the diagonalization trick to Turing machines.

An Undecidable Language - (Udacity, Youtube)

Here is the diagonalization trick applied to Turing machines. We’ll let \(M_1, M_2, \ldots \), be the set of all Turing machines. Turing machines can be described with strings, so there are a countable number of them and therefore such an enumeration is possible. We’ll create a table as before. I’ll define the function

\[ f(i,j) = \left\{ \begin{array}{ll} 1 & \mathrm{if} \; M_i \; \mathrm{accepts }\; < M_j > \\\\ 0 & \mathrm{otherwise} \end{array}\right. \]

For this example, I’ll fill out the table in some arbitrary way. The actual values aren’t important right now.

Now consider the language L, consisting of string descriptions of machines that do not accept their own descriptions. I.e.

\[ L = \{ < M > | < M > \notin L(M) \}. \]

Let’s add a Turing machine \(M_L\) that recognizes this language to the grid.

Again we run into a problem. The row corresponding to \(M_L\) is supposed to have the opposite values of what is on the diagonal. But what about the diagonal element of this row? What does the machine do when it is given its own description? If it accepts itself, then \(< M_L >\) is not in the language \(L\), so \(M_L\) should have accepted itself. On the other hand, if \(M_L\) does not accept its string representation, then \(< M_L >\) is in the language \(L\), so \(M_L\) should have accepted its string representation!

Thankfully, in computability, the resolution to this paradox isn’t as hard to see as in set theory or mathematical logic. We just conclude that the supposed machine \(M_L\) that recognizes the language L doesn’t exist.

Here is natural to object: “Of course, it exists. I just run M on itself and if it doesn’t accept, we accept.” The problem is that M on itself might loop, or it just might run for a very long time. There is no way to tell the difference.

The end result, then, is that the language L of string descriptions of machines that do not accept their own descriptions is not recognizable.

Recall that in order for a language to be decidable, both the language and its complement have to be recognizable. Since L is not recognizable, it is not decidable, and neither is it’s complement, the language where the machine does accept its own description. We’ll call this D_TM, D standing for diagonal,

\[D_{TM} = \{ < M > | < M > \in L(M)\} \]

These facts are the foundation for everything that we will argue in this lesson, so please make sure that you understand these claims.

Dumaflaches - (Udacity)

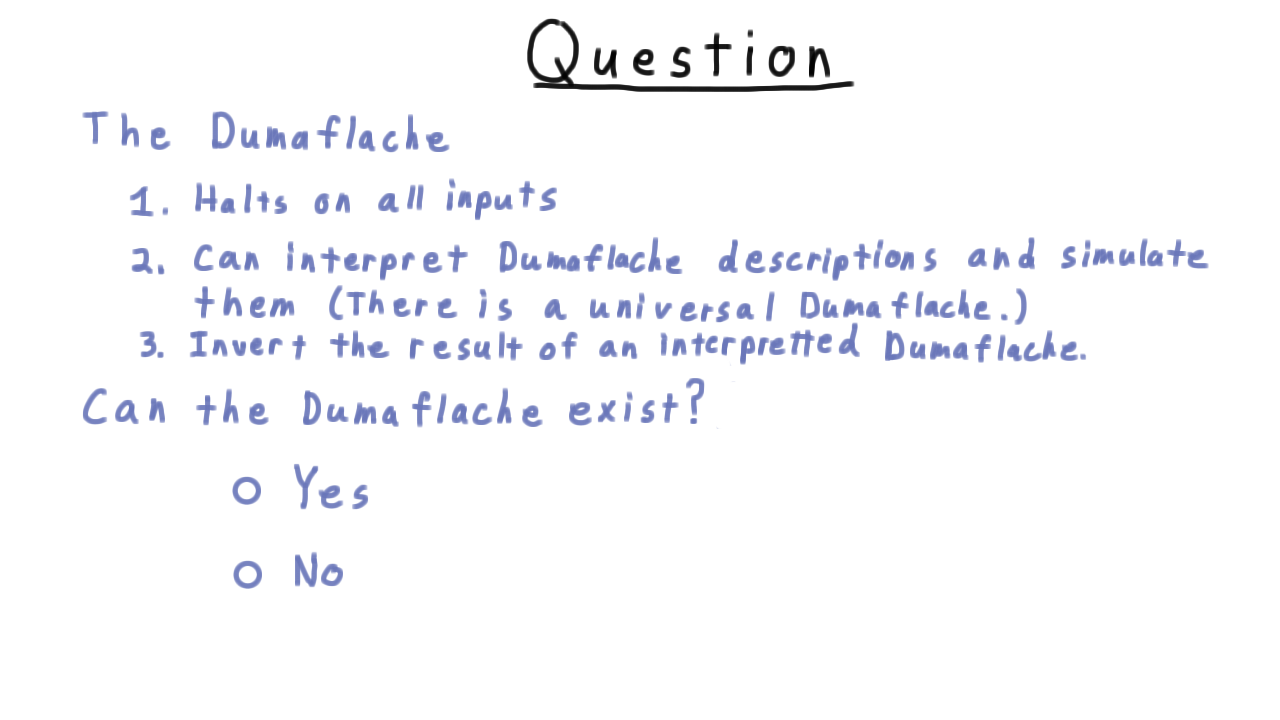

If you think back to the diagonalization of Turing machines, you will notice that we hardly referred to the properties of Turing machines at all. In fact, except at the end, we might as well have been talking about a different model of computation, say the dumaflache. Perhaps, unlike Turing machines, dumaflaches halt on every input. These models exist. A model that allowed one step for each input symbol would satisfy this requirement.

How do we resolve the paradox then? Can’t we just build a dumaflache that takes the description of a dumaflache as input and then runs it on itself? It has to halt, so we can reject it if accepts and accept if it rejects, achieving the needed inversion. What’s the problem? Take a minute to think about it.

Mapping Reductions - (Udacity, Youtube)

So far, we have only proven that one language is unrecognizable. One technique for finding more is mapping reduction, where we turn an instance of one problem into an instance of another.

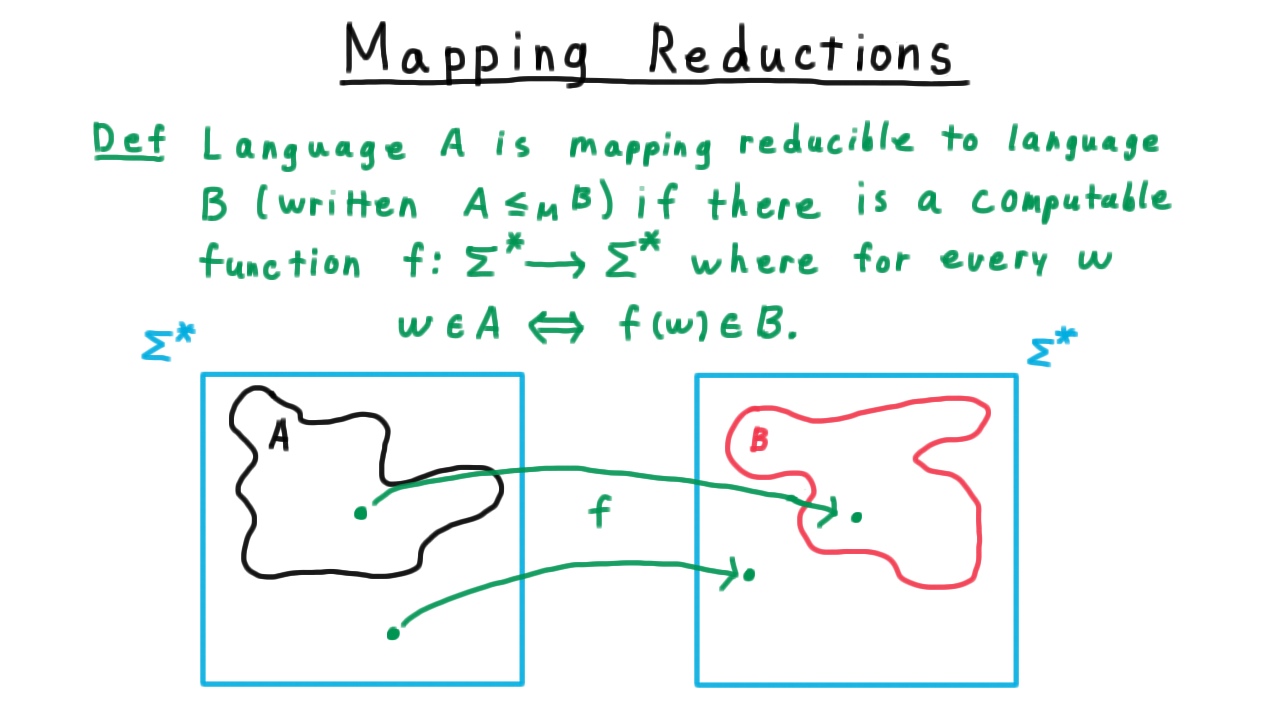

Formally, we say

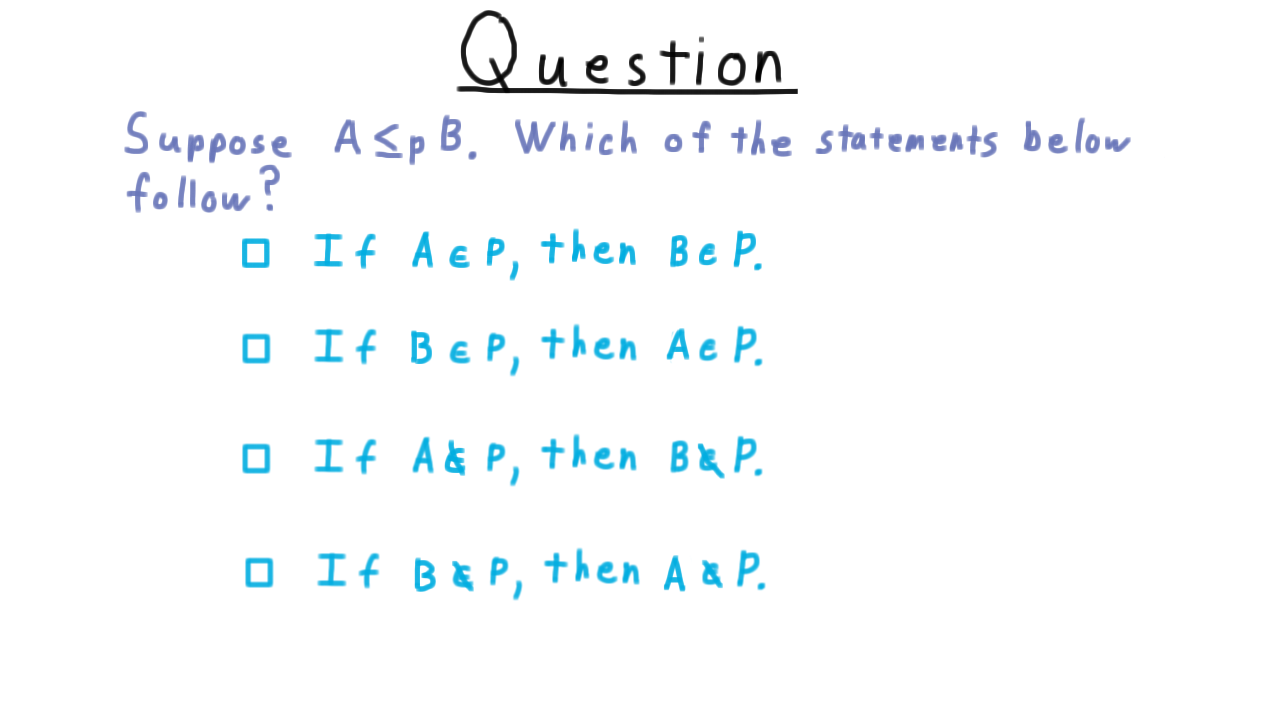

A language \(A\) is mapping-reducible to a language \(B\) ( \(A \leq_M B\) ) if there is a computable function \(f\) where for every string \(w\),

\[w \in A \Longleftrightarrow f(w) \in B.\]

We write this relation between languages with the less-than-or-equal-to sign with a little M on the side to indicate that we are refering to mapping reducibility.

It helps to keep in your mind a picture like this.

On the left, we have the language \(A\), a subset of \(\Sigma^*\) and the right we have the language \(B\), also a subset of \(\Sigma^*\).

In order for the computable function \(f\) to be a reduction, it has to map

- each string in \(A\) to a string in \(B\).

- each string not in \(A\) to a string not in \(B\).

The mapping doesn’t have to be one-to-one or onto; it just has to have this property.

Some Trivial Reductions - (Udacity, Youtube)

Before using reductions to prove that certain languages are undecidable, it sometimes helps to get some practice with the idea of a reduction itself–as a kind of warm-up. With this in mind, we’ve provided a few programming exercises. Good luck!

EVEN <= {’Even’} - (Udacity)

{’John‘} <= Complement of {’John’} - (Udacity)

{’Jane’} <= HALT - (Udacity)

Reductions and (Un)decidability - (Udacity, Youtube)

Now that we understanding reductions, we are ready to use them to help us prove decidability and even more interestingly undecidability.

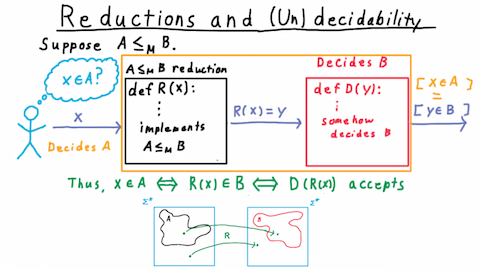

Suppose then that we have a language \(A\) that reduces to \(B\) (i.e. \( A\leq_M B\) ), and, let’s say that I want to know whether some string \(x\) is in \(A\).

If there is a decider for \(B\), then I’m in luck. I can use the reduction, which is a computable function, that takes in the one string and outputs another. I just need to feed in \(x\), take the output, and feed that into the decider for \(B\). If \(B\) accepts, then I know that \(x\) is in \(A.\) If \(B\) rejects, then I know that it isn’t.

This works because by the definition of a reduction \(x\) is in \(A\) if and only if \(R(x)\) is in \(B\). And by the definition of a decider this is true if and only if \(D\) accepts \(R(x)\). Therefore, the output of \(D\) tells me whether \(x\) is in \(A.\) If I can figure out whether an arbitrary string is in \(B\), then by the properties of the reduction,this also lets me figure out whether a string is in \(A\). We can say that the composition of the reduction with the decider for \(B\) is itself a decider for \(A.\)

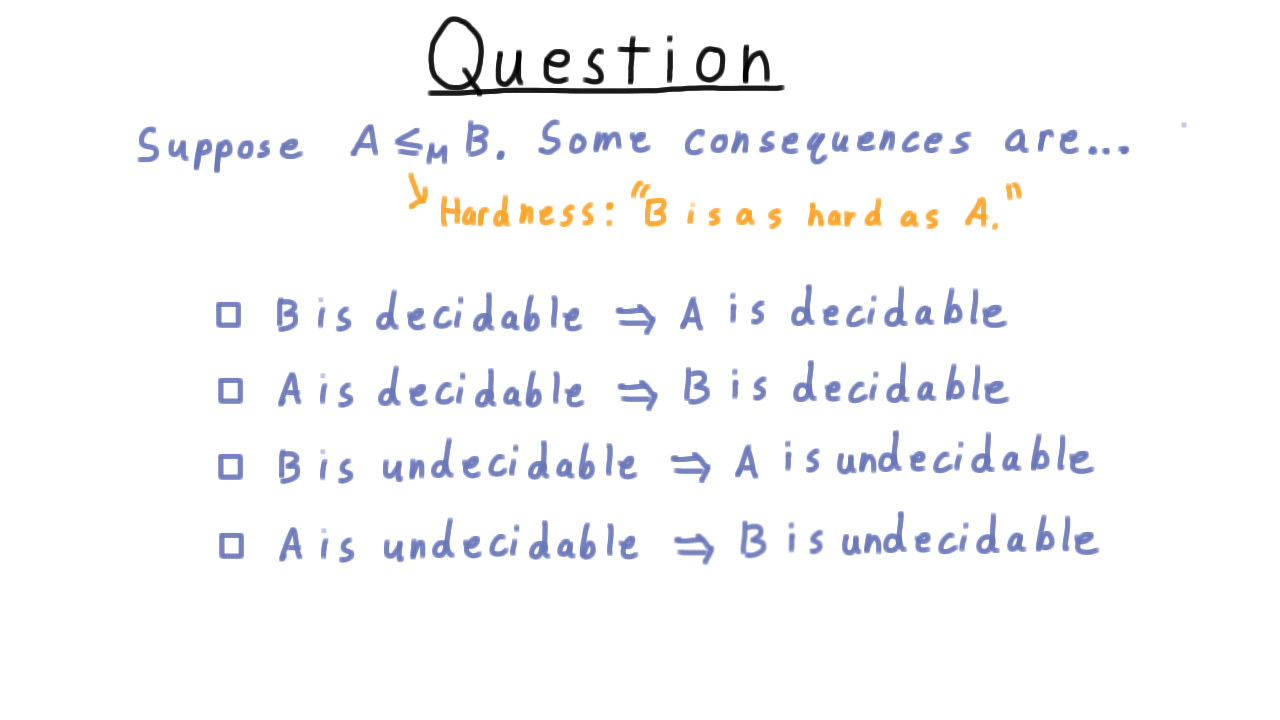

Thus, the fact that \(A\) reduces to \(B\) has four important consequences for decidability and recognizability. The easiest to see are

- If \(B\) is decidable, then \(A\) is also decidable. (As we’ve seen we can just compose the reduction with the decider for \(B.\))

- If \(B\) is recognizable, then \(A\) is also recognizable. (Same logic as above.)

The other two consequences are just the contrapositives of these.

- If \(A\) is undecidable, then B is undecidable. (This composition of the reduction and the decider for B can’t be a decider. Since we are assuming that there is a reduction, the only possibility is that the decider for B doesn’t exist. Hence, B is undecidable.)

- If \(A\) is unrecognizable, then B is unrecognizable. (Same logic as above)

Remember the Consequences - (Udacity)

Let’s do a quick question on the consequences of there being a reduction between two languages.

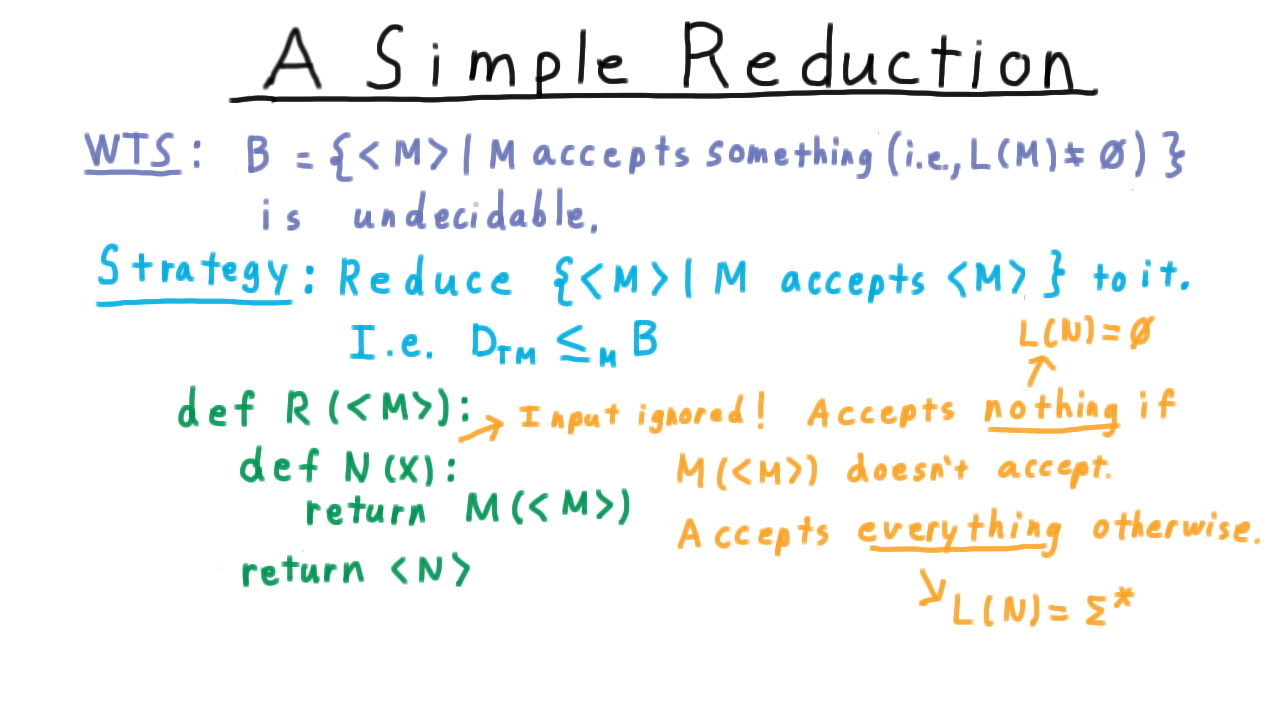

A Simple Reduction - (Udacity, Youtube)

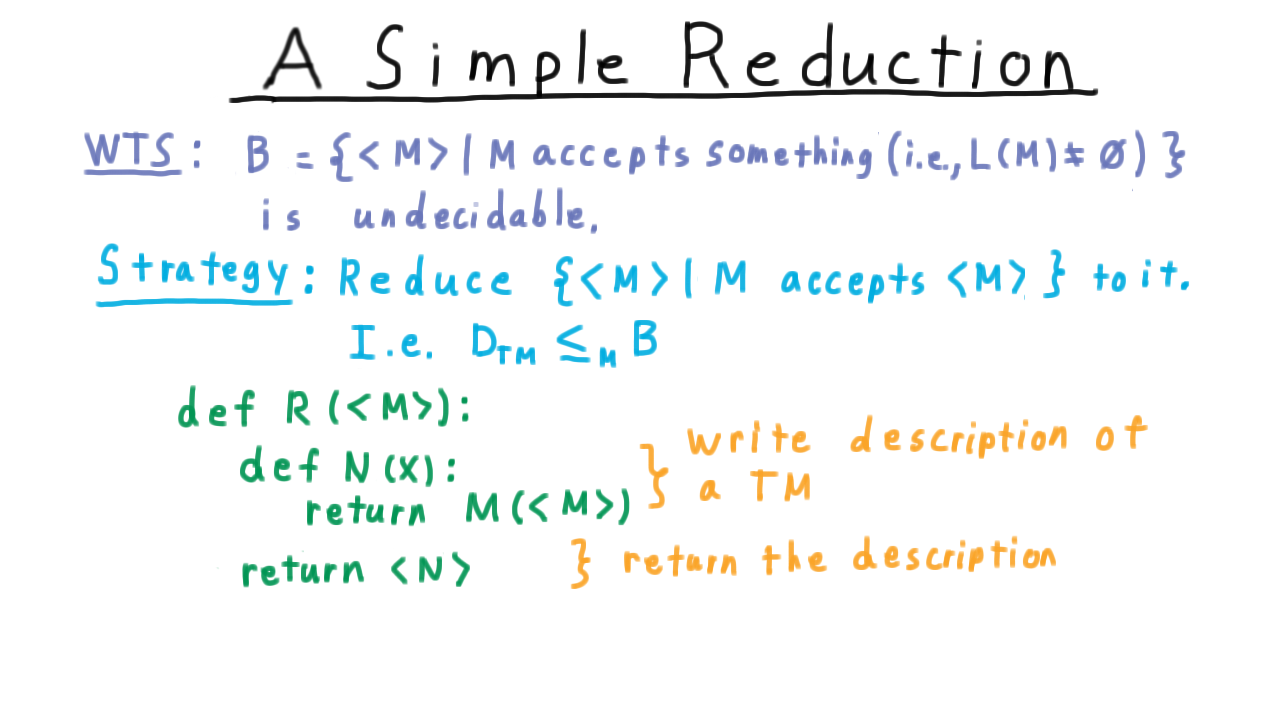

Now we are going to use a simple reduction to show that the language \(B\), consisting of the descriptions of Turing machines that accept something, i.e.

\[ B = \{ < M > | \; L(M) \neq \emptyset \},\]

is undecidable.

Our strategy is to reduce the diagonal language to it. In other words, we’ll argue that deciding \(B\) is at least as hard as deciding the diagonal language. Since we can’t decide the diagonal language, we can’t decide \(B\) either.

Here is one of many possible reductions.

The reduction is a computable function whose input is the description of a machine M, and it’s going to build another machine N in this python-like notation. First, we write down the description of a Turing machine by defining this nested function. Then we return that function. An important point is that the reduction never runs the machine N: it just writes the program for it!

Note here that, in this example, N totally ignores the actual input that is given to it. It just accepts if M(

In other words, the language of N will be the empty set in one case and Sigma-star in the other. A decider for B would be able to tell the difference, and therefore tell us whether M accepted its own description. Therefore, if B had a decider, we would be able to decide the diagonal language, which is impossible. So B cannot be decidable.

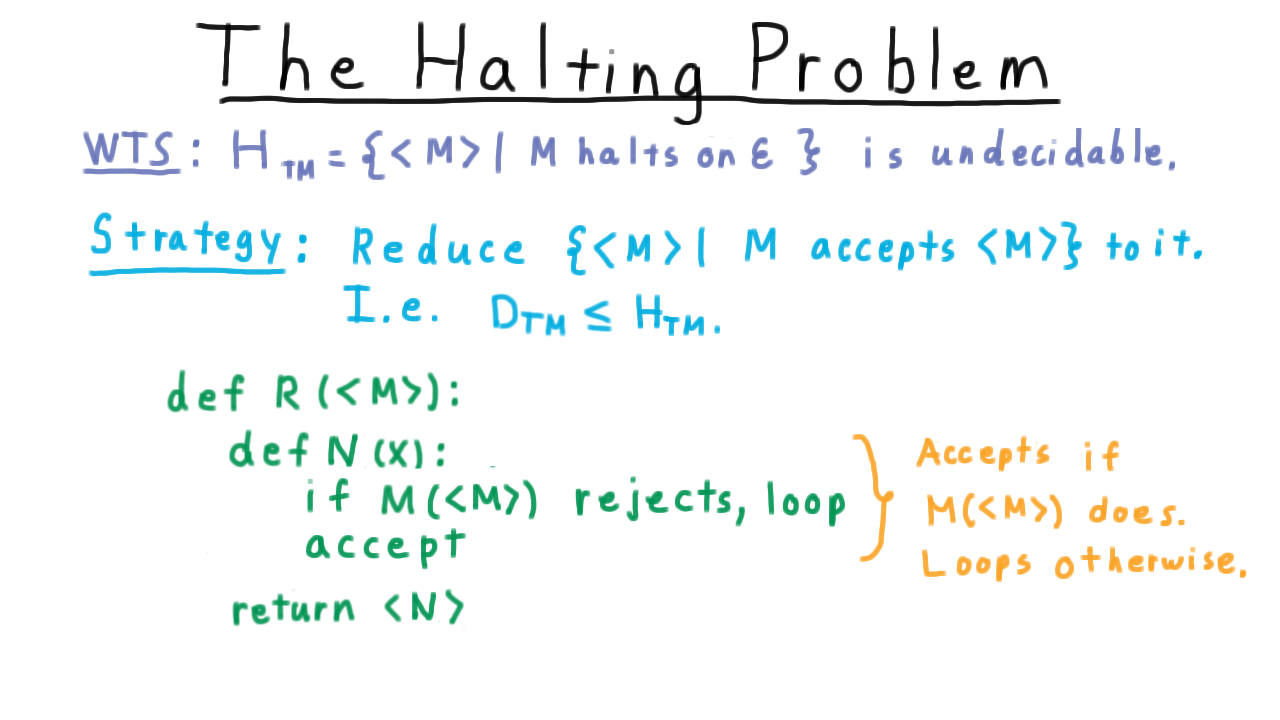

The Halting Problem - (Udacity, Youtube)

Next, we turn to the question of halting. As we have seen, not being able to tell whether a program will halt or not plays a central role in the diagonalization paradox, and it is at least partly intuitive that we can’t tell whether a program is just taking a long time or if it will run forever. It shouldn’t be surprising, then, that given an arbitrary program-input pair, we can’t decide whether the program will halt on that input. But actually, the situation is much more extreme. We can’t even decide if a program will halt when it is given no input at all: just the empty string.

Let’s go ahead prove this:

The language of descriptions of Turing machines that halt on the empty string, i.e.

\[ H_{TM} = \{ < M > |\; M \; \mathrm{halts}\; \mathrm{on} \; \epsilon\}\]

is undecidable.

We’ll do this by reducing from the diagonal language. That is, we’ll show the halting problem is at least as hard as the diagonal problem. Here is one of many possible reductions.

The reduction creates a machine N that simply ignores its input and runs M on itself. If M rejects itself, then N loops. Otherwise, N accepts.

At this point it might seem we’ve just done a bit of symbol manipulation but let’s step back and realize what we’ve just seen. We showed that no Turing machine can tell whether or not a computer program will halt or remain in a loop forever. This is a problem that we care about it and we can’t solve it on a Turing machine or any other kind of computer. You can’t solve the halting problem on your iPhone. You can’t solve the halting problem on your desktop, no matter how many cores you have. You can’t solve the halting problem in the cloud. Even if someone invents a quantum computer, it won’t be able to solve the halting problem. To misquote Nick Selby: “If you want to solve the halting problem, you’re at Georgia Tech but you still can’t do that!”

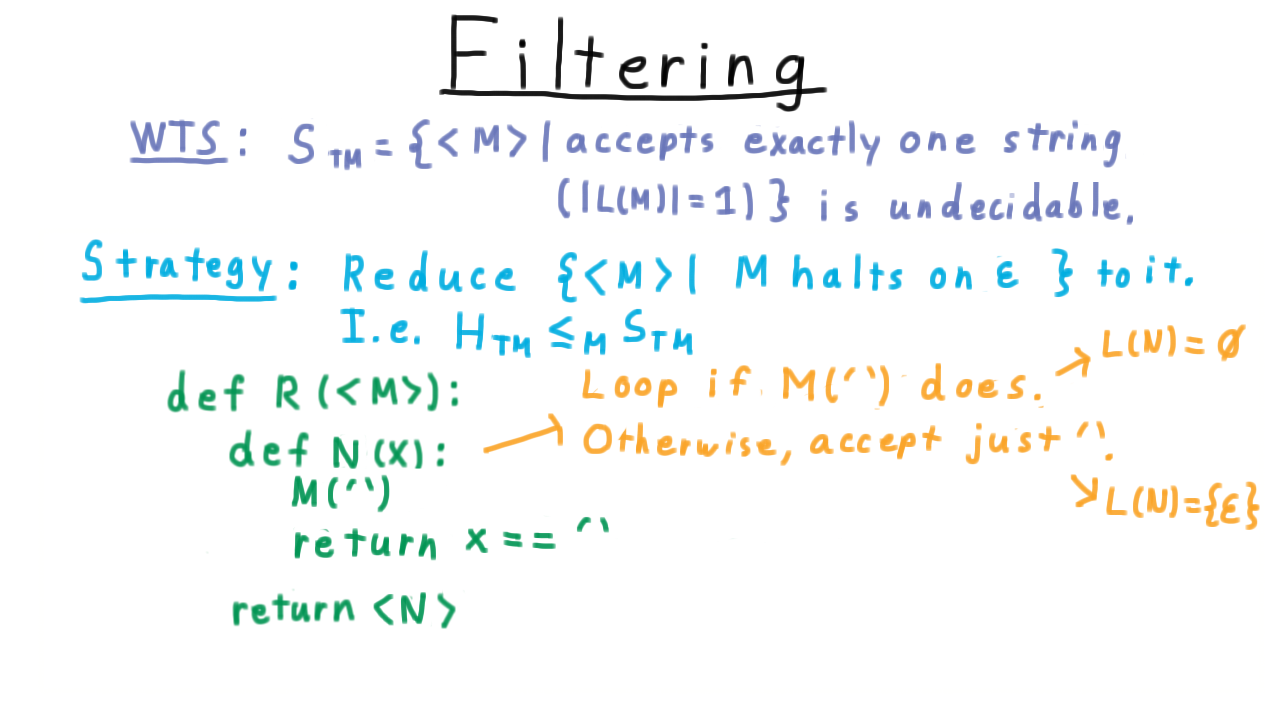

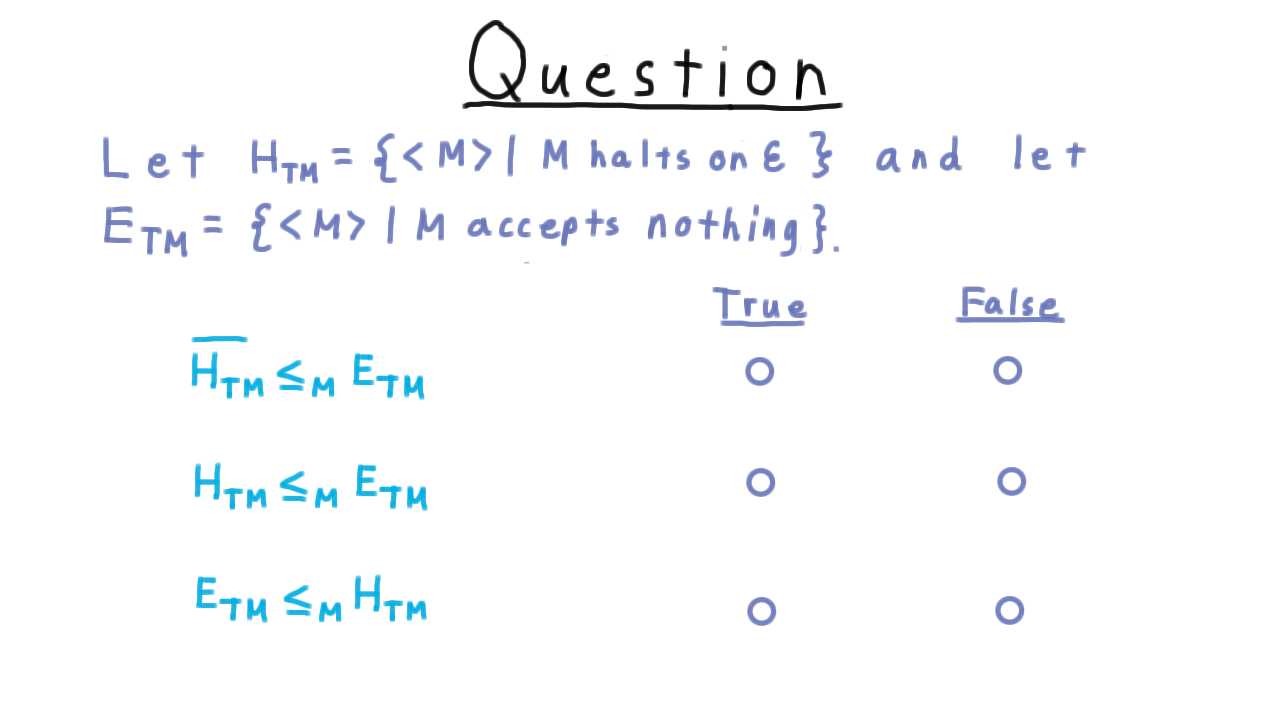

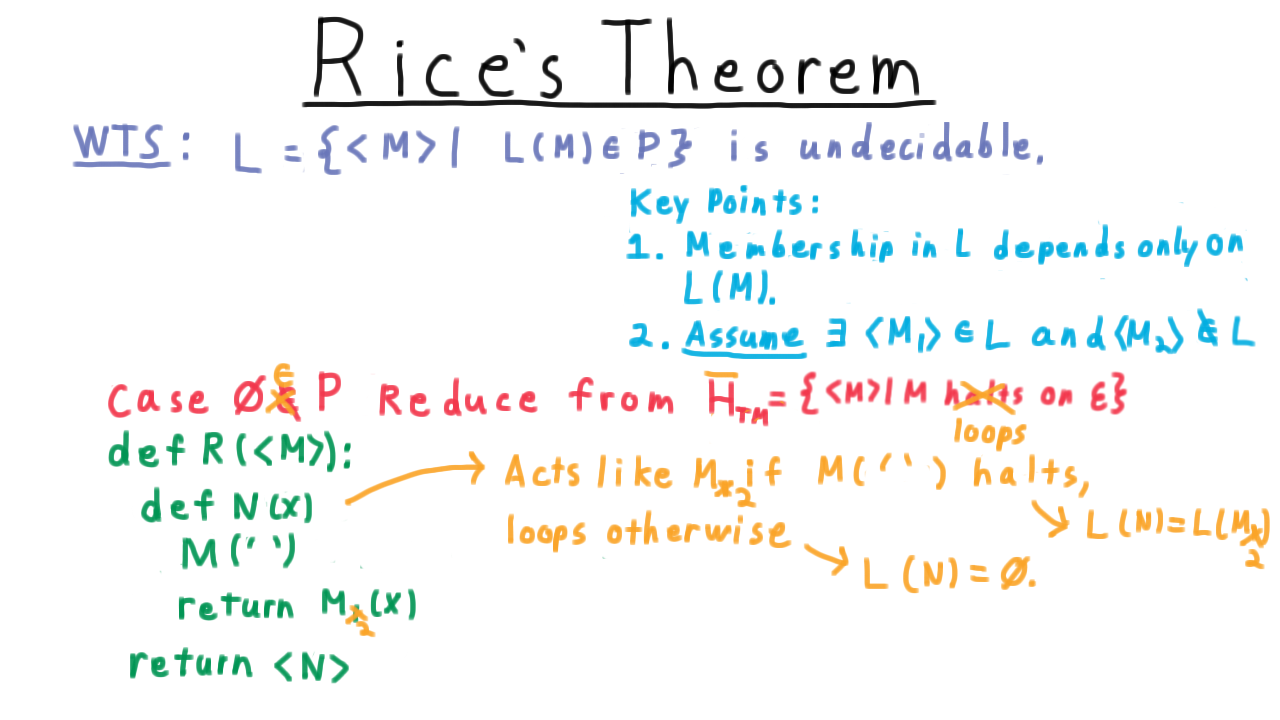

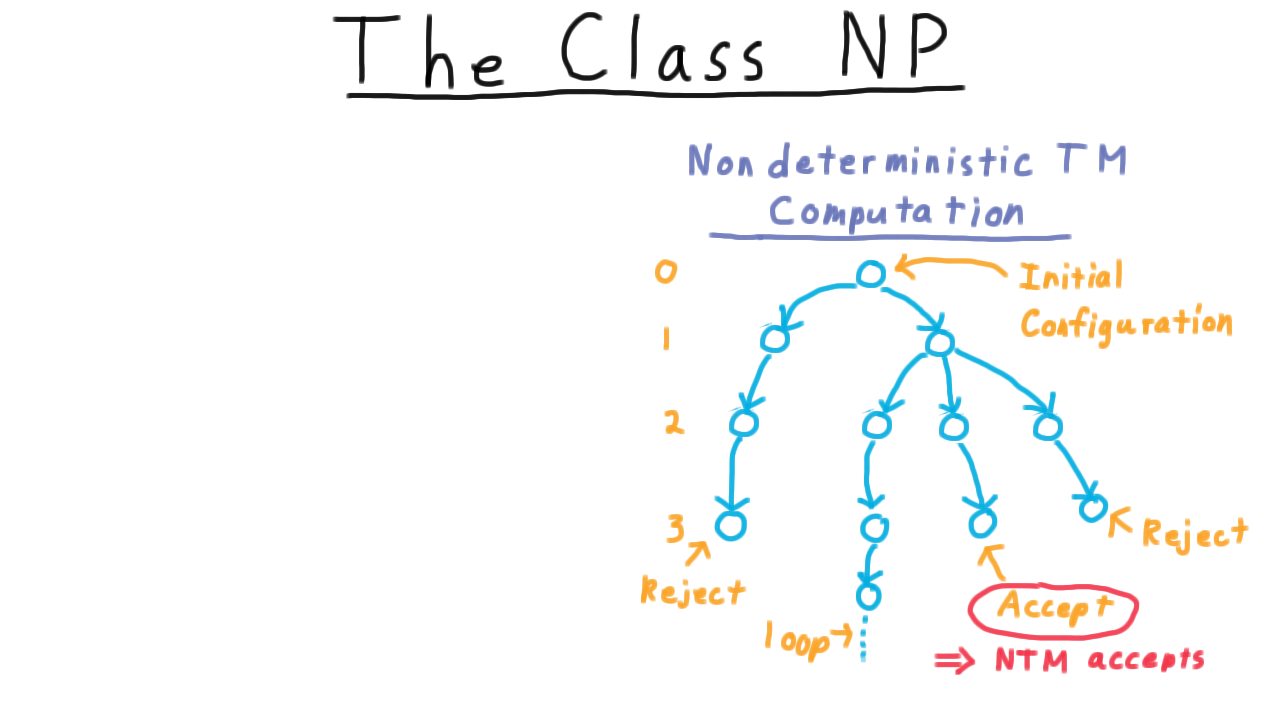

Filtering - (Udacity, Youtube)